Climate forecasting, genome sequencing, geoanalytics, computational fluid dynamics (CFD), and other types of high-performance computing (HPC) workloads can take advantage of massive amounts of compute power. These workloads are often spiky and massively parallel, and are used in situations where time to results is critical.

Old Way

Governments, well-funded research organizations, and Fortune 500 companies invest tens of millions of dollars in supercomputers in an attempt to gain a competitive edge. Building a state-of-the-art supercomputer requires specialized expertise, years of planning, and a long-term commitment to the architecture and the implementation. Once built, the supercomputer must be kept busy in order to justify the investment, resulting in lengthy queues while jobs wait their turn. Adding capacity and taking advantage of new technology is costly and can also be disruptive.

New Way

It is now possible to build a virtual supercomputer in the cloud! Instead of committing tens of millions of dollars over the course of a decade or more, you simply acquire the resources you need, solve your problem, and release the resources. You can get as much power as you need, when you need it, and only when you need it. Instead of force-fitting your problem to the available resources, you figure out how many resources you need, get them, and solve the problem in the most natural and expeditious way possible. You do not need to make a decade-long commitment to a single processor architecture, and you can easily adopt new technology as it becomes available. You can perform experiments at any scale without long-term commitment, and you can gain experience with emerging technologies such as GPUs and specialized hardware for machine learning training and inferencing.

TOP500 Run

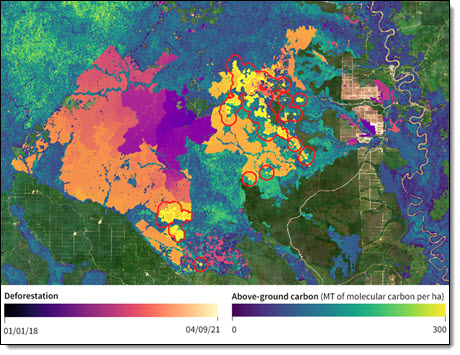

Descartes Labs optical and radar satellite imagery analysis of historical deforestation and estimated forest carbon loss for a region in Kalimantan, Borneo.

AWS customer Descartes Labs uses HPC to understand the world and to handle the flood of data that comes from sensors on the ground, in the water, and in space. The company has been cloud-based from the start, and focuses on geospatial applications that often involve petabytes of data.

CTO & Co-Founder Mike Warren told me that their intent is to never be limited by compute power. In the early days of his career, Mike worked on simulations of the universe and built several clusters and supercomputers, including Loki, Avalon, and Space Simulator. Mike was one of the first to build clusters from commodity hardware, and has learned a lot along the way.

After retiring from Los Alamos National Lab, Mike co-founded Descartes Labs. In 2019, Descartes Labs used AWS to power a TOP500 run that delivered 1.93 PFLOPS, landing at position 136 on the TOP500 list for June 2019. That run made use of 41,472 cores on a cluster of C5 instances. Notably, Mike told me that they launched this run without any help from or coordination with the EC2 team (because Descartes Labs routinely runs production jobs of this magnitude for their customers, their account already had sufficiently high service quotas). To learn more about this run, read Thunder from the Cloud: 40,000 Cores Running in Concert on AWS. This is my favorite part of that story:

We were granted access to a group of nodes in the AWS US-East 1 region for approximately $5,000 charged to the company credit card. The potential for democratization of HPC was palpable since the cost to run custom hardware at that speed is probably closer to $20 to $30 million. Not to mention a 6–12 month wait time.

After the success of this run, Mike and his team decided to work on an even more substantial one for 2021, with a target of 7.5 PFLOPS. Working with the EC2 team, they obtained an EC2 On-Demand Capacity Reservation for a 48-hour period in early June. After some “small” runs that used just 1,024 instances at a time, they were ready to take their shot. They launched 4,096 EC2 instances (C5, C5d, R5, R5d, M5, and M5d) with a total of 172,692 cores. Here are the results:

- Rmax – 9.95 PFLOPS. This is the actual performance that was achieved: almost 10 quadrillion floating point operations per second.

- Rpeak – 15.11 PFLOPS. This is the theoretical peak performance.

- HPL Efficiency – 65.87%. The ratio of Rmax to Rpeak, or a measure of how well the hardware is utilized.

- N: 7,864,320. This is the size of the matrix that is inverted to perform the TOP500 benchmark. N² is about 61.84 trillion.

- P x Q: 64 x 128. This is a parameter for the run, and represents a processing grid.
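To make the relationships between these figures concrete, here is a small Python sketch that recomputes them from the rounded values quoted above. The variable and function names are purely illustrative. The 8-byte element size reflects the fact that HPL works in 64-bit floating point. The "processes per instance" figure is only an assumption that the P x Q grid was spread evenly across the 4,096 instances, which the post does not state.

```python
# Figures as reported in the post (rounded); all names are illustrative.
RMAX_PFLOPS = 9.95       # measured performance
RPEAK_PFLOPS = 15.11     # theoretical peak
N = 7_864_320            # order of the HPL matrix
P, Q = 64, 128           # HPL process grid
INSTANCES = 4_096
CORES = 172_692
BYTES_PER_DOUBLE = 8     # HPL uses 64-bit floating point


def hpl_efficiency(rmax: float, rpeak: float) -> float:
    """Fraction of theoretical peak that was actually delivered."""
    return rmax / rpeak


efficiency = hpl_efficiency(RMAX_PFLOPS, RPEAK_PFLOPS)
matrix_elements = N * N
matrix_bytes = matrix_elements * BYTES_PER_DOUBLE
grid_processes = P * Q

# Assumption: grid processes are spread evenly over the instances.
processes_per_instance = grid_processes / INSTANCES
cores_per_instance = CORES / INSTANCES

print(f"HPL efficiency:        {efficiency:.2%}")
print(f"Matrix elements (N^2): {matrix_elements:,}")
print(f"Matrix size:           {matrix_bytes / 2**40:.0f} TiB")
print(f"Grid processes:        {grid_processes:,}")
print(f"Processes/instance:    {processes_per_instance:.1f} (assumed even spread)")
print(f"Average cores/instance: {cores_per_instance:.1f}")
```

Because the inputs are rounded to two decimals, the computed efficiency lands a few hundredths of a percentage point away from the reported 65.87%. The matrix alone works out to 450 TiB of double-precision data, which gives a sense of why the run needed the aggregate memory of thousands of instances.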

This run sits at position 41 on the June 2021 TOP500 list, and represents a 417% performance increase in just two years. When compared to the other CPU-based runs, this one sits at position 20. The GPU-based runs are really impressive, but ranking them separately makes for the best apples-to-apples comparison.
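As a rough check on the two-year improvement, the sketch below compares the 2019 and 2021 runs using only the rounded Rmax and core counts quoted in this post. The helper name is illustrative, and the per-core figures are simple averages across mixed instance types, so they are best read as ballpark numbers.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


# Rounded figures quoted in the post.
RMAX_2019_PFLOPS, CORES_2019 = 1.93, 41_472
RMAX_2021_PFLOPS, CORES_2021 = 9.95, 172_692

speedup = RMAX_2021_PFLOPS / RMAX_2019_PFLOPS
increase = percent_increase(RMAX_2019_PFLOPS, RMAX_2021_PFLOPS)

# Average delivered GFLOPS per core (1 PFLOPS = 1,000,000 GFLOPS).
gflops_per_core_2019 = RMAX_2019_PFLOPS * 1e6 / CORES_2019
gflops_per_core_2021 = RMAX_2021_PFLOPS * 1e6 / CORES_2021

print(f"Speedup 2019 -> 2021: {speedup:.2f}x")
print(f"Performance increase: {increase:.0f}%")
print(f"GFLOPS per core 2019: {gflops_per_core_2019:.1f}")
print(f"GFLOPS per core 2021: {gflops_per_core_2021:.1f}")
```

With these rounded inputs the increase comes out within a couple of percentage points of the 417% quoted above. The small gap is consistent with the published figure having been derived from unrounded Rmax values.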

Mike and his team were very pleased with the results, and believe that the run demonstrates the power and value of the cloud for HPC jobs of any scale. Mike noted that the Thinking Machines CM-5 that took the top spot in 1993 (and made a guest appearance in Jurassic Park) is actually slower than a single AWS core!

The run wrapped up at 11:56 AM PST on June 4th. By 12:20 PM, just 24 minutes later, the cluster had been taken down and all of the instances had been stopped. This is the power of on-demand supercomputing!

Imagine a Beowulf Cluster

Back in the early days of Slashdot, every post that referenced some then-impressive piece of hardware would invariably include a comment to the effect of “Imagine a Beowulf cluster.” Today, you can easily imagine (and then launch) clusters of almost any size and use them to address your large-scale computational needs.

If you have planetary-scale problems that can benefit from the speed and flexibility of the AWS Cloud, it is time to put your imagination to work! Here are some resources to get you started:

Congratulations

I would like to offer my congratulations to Mike and to his team at Descartes Labs for this amazing achievement! Mike has worked for decades to prove to the world that mass-produced, commodity hardware and software can be used to build a supercomputer, and the results more than speak for themselves.

To learn more about this run and about Descartes Labs, read Descartes Labs Achieves #41 in TOP500 with Cloud-based Supercomputing Demonstration Powered by AWS, Signaling New Era for Geospatial Data Analysis at Scale.

— Jeff;
