For every user request, the code checks whether the current app instance is already connected to the appropriate tenant database. If not, it creates a new connection. This is called lazy initialization.

When an application starts, it faces a choice: should it connect to the database immediately (eager initialization), or wait for the first incoming user request (lazy initialization)? This question matters when your app uses autoscaling in Cloud Run or in Google Kubernetes Engine (GKE), because new instances will be started frequently.

In general I prefer eager initialization, because it improves responsiveness. When the first request is processed, the database is already connected. This saves several hundred milliseconds in response time for that first request.

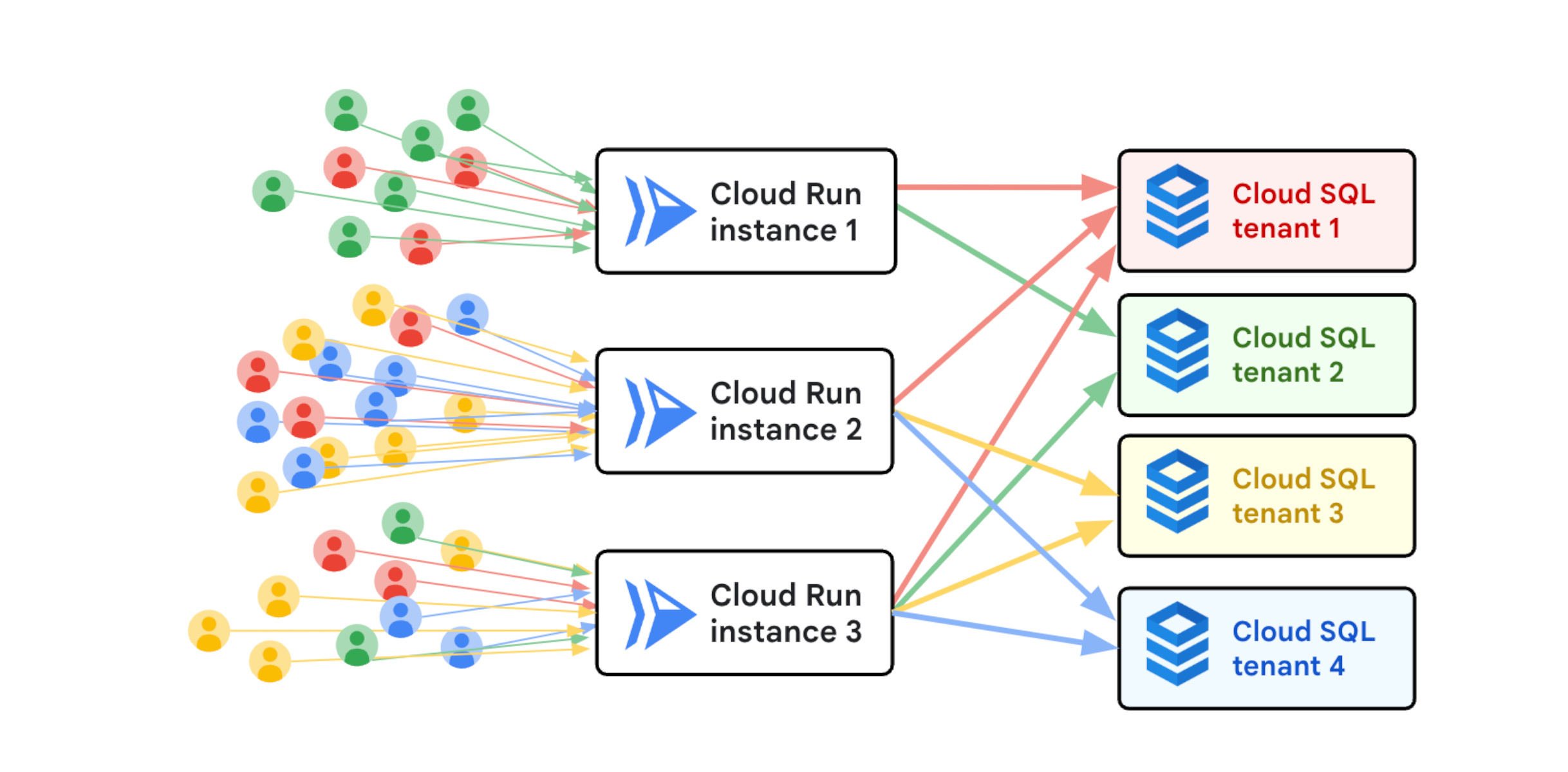

For the Nexuzhealth case with multi-tenancy, lazy initialization is more appropriate. It makes sense for the server to connect to each database on demand and save on startup latency, which is important for autoscaling speed.
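The two strategies can be contrasted in a minimal sketch. Everything here is hypothetical and for illustration only: `connect` stands in for a real database driver call, `CONNECT_SECONDS` is a made-up latency, and the code is single-threaded on purpose so that it shows only when the connection cost is paid.

```python
import time

CONNECT_SECONDS = 0.01  # assumption: stands in for a real connection handshake


def connect(tenant):
    # Hypothetical helper, not a real driver call.
    time.sleep(CONNECT_SECONDS)
    return f"connection-to-{tenant}"


class EagerApp:
    def __init__(self, tenants):
        # Eager: pay the connection cost for every tenant at startup.
        self.connections = {t: connect(t) for t in tenants}

    def handle(self, tenant):
        # The first request already finds its connection.
        return self.connections[tenant]


class LazyApp:
    def __init__(self):
        # Lazy: start immediately, with no connections at all.
        self.connections = {}

    def handle(self, tenant):
        # Connect on first use for this tenant, then reuse.
        if tenant not in self.connections:
            self.connections[tenant] = connect(tenant)
        return self.connections[tenant]
```

With many tenants, the eager variant makes startup time grow with the number of tenants, while the lazy variant moves that cost to the first request for each tenant.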

We expected the connection to each tenant database to be created only once by every app instance, and then be reused in subsequent requests for the same tenant. We didn't see any errors or abnormal messages in the logs.

With this algorithm, the app works correctly but performs very badly every time a new instance is started. What's happening? Let's take a closer look at the code snippet again.

The dbmap data structure is shared by many threads that handle requests. When dealing with shared state in an app instance that handles many requests concurrently, we must ensure proper synchronization to avoid data races. This code uses an RW lock. But there are two problems with the pseudocode above.

First, the slowest operation is createConnection(), which may take over 200 ms. This operation is executed while holding the RW lock in write mode, which means that the server can create at most one database connection at a time. That may be suboptimal, but it was not the root cause of the performance issue.

Second, and more importantly, there is a TOCTOU (time-of-check to time-of-use) problem in the code above: between reading the map and creating the connection, the connection may already have been created by another request. This is a race condition!

Race conditions can be rare and difficult to reproduce. In this case the problem was happening every time, because of an unfortunate succession of events:

- The application is serving traffic.

- More traffic arrives, increasing the load on the server instances.

- The autoscaler triggers the creation of a new instance.

- As soon as the instance is ready to handle HTTP requests, many requests are immediately routed to the new instance. They sound like a thundering herd!

- Several requests read the empty map, and each decides to create a db connection.

- Only one request acquires the lock in write mode, and needs about 200 ms to create a database connection before releasing the lock again.

- During that time, all other requests are blocked, waiting to acquire the lock.

- When a second (or subsequent) request finally gets the lock in write mode, it is unaware that the map has changed (it already checked that the map was empty), and it creates its own new, redundant connection.
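The sequence above can be reproduced in a small sketch. This is not the original application code: it is a Python illustration under stated assumptions. `create_connection` is a hypothetical stand-in for createConnection() with a shortened delay, a plain `threading.Lock` stands in for the RW lock (Python's standard library has none), and a `threading.Barrier` is added only to force the unlucky interleaving in which every request checks the map before any of them creates a connection.

```python
import threading
import time

dbmap = {}               # tenant -> connection, shared by all request threads
lock = threading.Lock()  # stand-in for the RW lock (assumption, see lead-in)
opened = []              # one entry per connection actually opened


def create_connection(tenant):
    # Hypothetical stand-in for createConnection(); the sleep models its latency.
    time.sleep(0.01)
    opened.append(tenant)
    return object()


def get_connection_racy(tenant, all_checked):
    with lock:                    # time of check: read the map, release the lock
        conn = dbmap.get(tenant)
    all_checked.wait()            # demo only: every request checks before any creates
    if conn is None:
        with lock:                # time of use: the earlier check may be stale
            conn = create_connection(tenant)
            dbmap[tenant] = conn  # overwrites whatever another request stored
    return conn


def simulate(n_requests, tenant="tenant-a"):
    # Fire n_requests concurrent calls; return how many connections were opened.
    dbmap.clear()
    opened.clear()
    all_checked = threading.Barrier(n_requests)
    threads = [
        threading.Thread(target=get_connection_racy, args=(tenant, all_checked))
        for _ in range(n_requests)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(opened)


if __name__ == "__main__":
    print(simulate(8))  # prints 8: one connection per request, seven of them redundant
```

Because the creations run one after another under the lock, the last request in this sketch waits for all the earlier ones before it can even start its own.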

All the requests were performing a long operation, and were effectively waiting on each other, which explains the high latencies we observed. In the worst case, the app may open so many connections that the database server starts refusing them.
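A rough back-of-the-envelope sketch shows how the waiting adds up. It assumes each creation takes exactly 200 ms, that creations are fully serialized by the write lock, and that nothing else contributes to latency; the request count of 5 is an arbitrary illustrative value, not a measurement.

```python
def completion_times_ms(n_requests, create_ms=200):
    # Creations run back to back under the write lock, so the k-th request
    # to get the lock finishes only after k creations have completed.
    return [k * create_ms for k in range(1, n_requests + 1)]


print(completion_times_ms(5))  # [200, 400, 600, 800, 1000]
```

Under these assumptions the worst-case latency grows linearly with the number of requests that arrive before the first connection is stored.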

Solution

A possible solution was to check the map again just after acquiring the lock in write mode, which is an acceptable form of double-checked locking.
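A minimal sketch of that second check, in Python, could look like the following. It is an illustration under assumptions, not the production fix: `create_connection` is a hypothetical stand-in for createConnection(), a plain `threading.Lock` stands in for the RW lock, and the `threading.Barrier` exists only to force the worst-case interleaving where every request sees an empty map on its first check.

```python
import threading
import time

dbmap = {}               # tenant -> connection, shared by all request threads
lock = threading.Lock()  # stand-in for the RW lock (assumption, see lead-in)
opened = []              # one entry per connection actually opened


def create_connection(tenant):
    # Hypothetical stand-in for createConnection(); the sleep models its latency.
    time.sleep(0.01)
    opened.append(tenant)
    return object()


def get_connection_checked(tenant, all_checked=None):
    with lock:                        # first check
        conn = dbmap.get(tenant)
    if all_checked is not None:
        all_checked.wait()            # demo only: force the worst-case interleaving
    if conn is None:
        with lock:
            conn = dbmap.get(tenant)  # second check, while holding the lock
            if conn is None:          # only the first request gets here
                conn = create_connection(tenant)
                dbmap[tenant] = conn
    return conn


def simulate(n_requests, tenant="tenant-a"):
    # Fire n_requests concurrent calls; return how many connections were opened.
    dbmap.clear()
    opened.clear()
    all_checked = threading.Barrier(n_requests)
    threads = [
        threading.Thread(target=get_connection_checked, args=(tenant, all_checked))
        for _ in range(n_requests)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(opened)


if __name__ == "__main__":
    print(simulate(8))  # prints 1: later requests find the connection on the second check
```

Requests that lose the race still wait for the first creation to finish, but they then reuse its result instead of spending another 200 ms each on a redundant connection.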
