Generative AI is no longer just a buzzword or something that's "tech for tech's sake." It's here and it's real, today, as small and large organizations across industries adopt generative AI to deliver tangible value to their employees and customers. This has inspired and refined new techniques like prompt engineering, retrieval augmented generation, and fine-tuning so organizations can successfully deploy generative AI for their own use cases and with their own data. We see innovation across the value chain, whether it's new foundation models or GPUs, or novel applications of preexisting capabilities, like vector similarity search or machine learning operations (MLOps) for generative AI. Together, these rapidly evolving techniques and technologies will help organizations optimize the efficiency, accuracy, and safety of generative AI applications. Which means everyone can be more productive and creative!

We also see generative AI inspiring a wellspring of new audiences to work on AI projects. For example, software developers who may have seen AI and machine learning as the realm of data scientists are getting involved in the selection, customization, evaluation, and deployment of foundation models. Many business leaders, too, feel a sense of urgency to ramp up on AI technologies to better understand not only the possibilities, but also the limitations and risks. At Microsoft Azure, this expansion in addressable audiences is exciting, and pushes us to provide more integrated and customizable experiences that make responsible AI accessible for different skillsets. It also reminds us that investing in education is essential, so that all our customers can reap the benefits of generative AI, safely and responsibly, no matter where they are in their AI journey.

We have a lot of exciting news this month, much of it focused on providing developers and data science teams with expanded choice in generative AI models and greater flexibility to customize their applications. And in the spirit of education, I encourage you to check out some of these foundational learning resources:

For enterprise leaders

- Building a Foundation for AI Success: A Leader's Guide: Read key insights from Microsoft, our customers and partners, industry analysts, and AI leaders to help your organization thrive on your path to AI transformation.

- Transform your business with Microsoft AI: In this 1.5-hour learning path, business leaders will find the knowledge and resources to adopt AI in their organizations. It explores planning, strategizing, and scaling AI projects in a responsible way.

- Career Essentials in Generative AI: In this 4-hour course, you'll learn the core concepts of AI and generative AI functionality, how you can start using generative AI in your own day-to-day work, and considerations for responsible AI.

For builders

- Introduction to generative AI: This 1-hour course for beginners will help you understand how LLMs work, how to get started with Azure OpenAI Service, and how to plan for a responsible AI solution.

- Start Building AI Plugins With Semantic Kernel: This 1-hour course for beginners will introduce you to Microsoft's open source orchestrator, Semantic Kernel, and how to use prompts, semantic functions, and vector databases.

- Work with generative AI models in Azure Machine Learning: This 1-hour intermediate course will help you understand the Transformer architecture and how to fine-tune a foundation model using the model catalog in Azure Machine Learning.

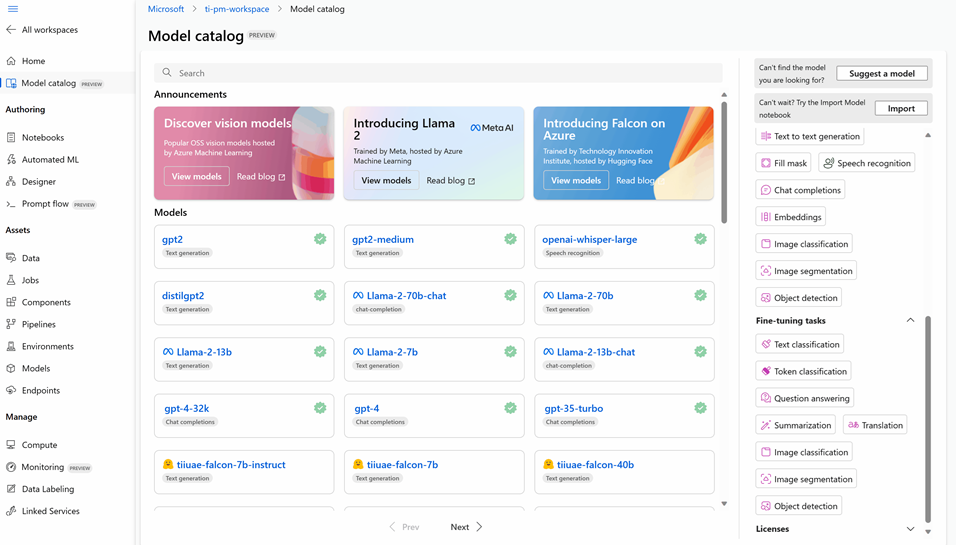

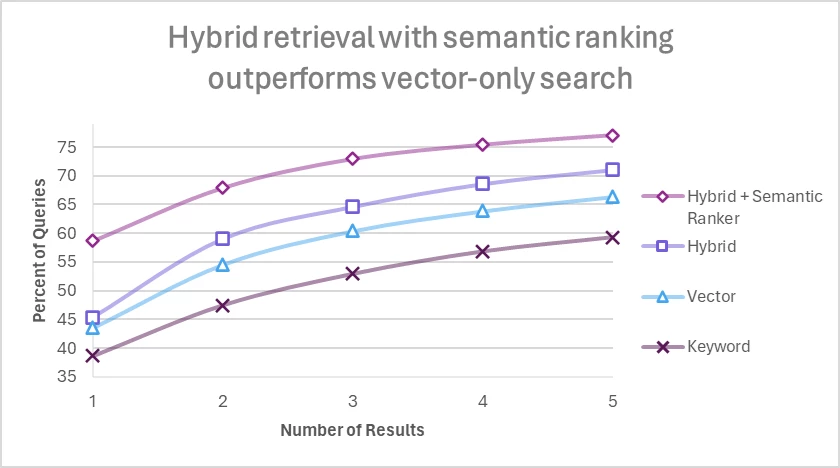

Access new, powerful foundation models for speech and vision in Azure AI

We're constantly looking for ways to help machine learning professionals and developers easily discover, customize, and integrate large pre-trained AI models into their solutions. In May, we announced the public preview of foundation models in the Azure AI model catalog, a central hub to explore collections of various foundation models from Hugging Face, Meta, and Azure OpenAI Service. This month brought another milestone: the public preview of a diverse suite of new open-source vision models in the Azure AI model catalog, spanning image classification, object detection, and image segmentation capabilities. With these models, developers can easily integrate powerful, pre-trained vision models into their applications to improve performance for predictive maintenance, smart retail store solutions, autonomous vehicles, and other computer vision scenarios.

In July we announced that the Whisper model from OpenAI would also be coming to Azure AI services. This month, we officially released Whisper in Azure OpenAI Service and Azure AI Speech, now in public preview. Whisper can transcribe audio into text in an astounding 57 languages. The foundation model can also translate all those languages to English and generate transcripts with enhanced readability, making it a powerful complement to existing capabilities in Azure AI. For example, by using Whisper in conjunction with the Azure AI Speech batch transcription application programming interface (API), customers can quickly transcribe large volumes of audio content at scale with high accuracy. We look forward to seeing customers innovate with Whisper to make information more accessible for more audiences.

Operationalize application development with new code-first experiences and model monitoring for generative AI

As generative AI adoption accelerates and matures, MLOps for LLMs, or simply "LLMOps," will be instrumental in realizing the full potential of this technology at enterprise scale. To expedite and streamline the iterative process of prompt engineering for LLMs, we introduced our prompt flow capabilities in Azure Machine Learning at Microsoft Build 2023, providing a way to design, experiment, evaluate, and deploy LLM workflows. This month, we announced a new code-first prompt flow experience through our SDK, CLI, and VS Code extension, available in preview. Now, teams can more easily apply rapid testing, optimization, and version control techniques to generative AI projects, for more seamless transitions from ideation to experimentation and, ultimately, production-ready applications.

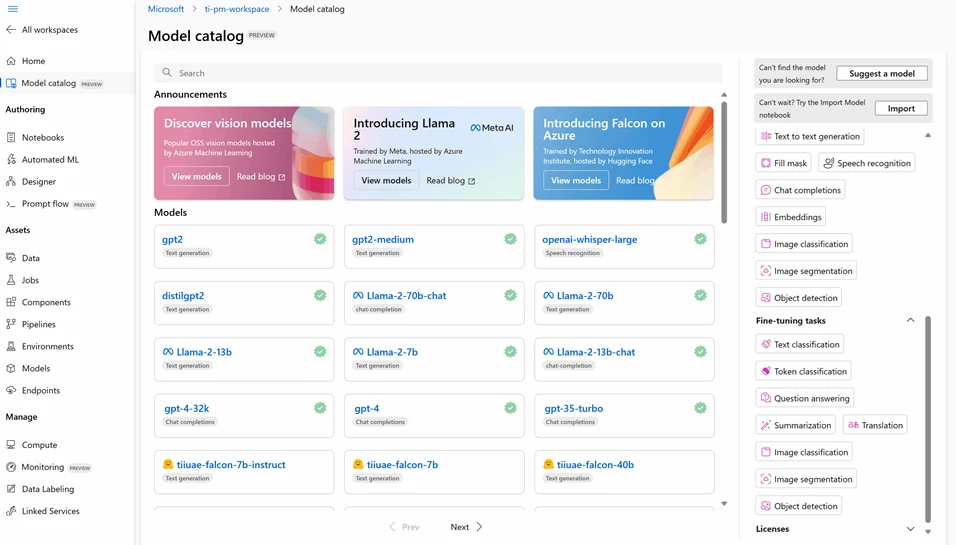

Of course, once you deploy your LLM application in production, the job isn't finished. Changes in data and consumer behavior can influence your application over time, resulting in outdated AI systems, which negatively impact business outcomes and expose organizations to compliance and reputational risks. This month, we announced model monitoring for generative AI applications, now available in preview in Azure Machine Learning. Users can now collect production data, analyze key safety, quality, and token consumption metrics on a recurring basis, receive timely alerts about critical issues, and visualize the results over time in a rich dashboard.
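To make the idea of recurring token consumption checks concrete, here is a minimal sketch in plain Python. It is not the Azure Machine Learning monitoring feature or its data schema: the record fields (`date`, `prompt_tokens`, `completion_tokens`) and the budget threshold are hypothetical, chosen only to illustrate aggregating production logs and flagging days that need an alert.

```python
from collections import defaultdict


def daily_token_totals(records):
    """Sum prompt and completion tokens per day.

    `records` is a list of dicts with hypothetical keys:
    "date", "prompt_tokens", "completion_tokens".
    """
    totals = defaultdict(int)
    for record in records:
        totals[record["date"]] += record["prompt_tokens"] + record["completion_tokens"]
    return dict(totals)


def days_over_budget(records, budget):
    """Return the sorted list of days whose total token use exceeds `budget`."""
    totals = daily_token_totals(records)
    return sorted(day for day, total in totals.items() if total > budget)


# Illustrative data only; not real production logs.
SAMPLE_LOG = [
    {"date": "2023-09-01", "prompt_tokens": 100, "completion_tokens": 50},
    {"date": "2023-09-01", "prompt_tokens": 200, "completion_tokens": 100},
    {"date": "2023-09-02", "prompt_tokens": 50, "completion_tokens": 20},
]
```

Calling `days_over_budget(SAMPLE_LOG, budget=400)` would flag only the first day, which is the kind of signal a scheduled job could turn into an alert.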

Enter the new era of corporate search with Azure Cognitive Search and Azure OpenAI Service

Microsoft Bing is transforming the way users discover relevant information across the world wide web. Instead of providing a lengthy list of links, Bing will now intelligently interpret your question and source the best answers from various corners of the internet. What's more, the search engine presents the information in a clear and concise manner along with verifiable links to data sources. This shift in online search experiences makes internet browsing more user-friendly and efficient.

Now, imagine the transformative impact if businesses could search, navigate, and analyze their internal data with a similar level of ease and efficiency. This new paradigm would enable employees to swiftly access corporate knowledge and harness the power of enterprise data in a fraction of the time. This architectural pattern is known as Retrieval Augmented Generation (RAG). By combining the power of Azure Cognitive Search and Azure OpenAI Service, organizations can now make this streamlined experience possible.
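The RAG pattern can be sketched in a few lines: retrieve the most relevant internal documents for a question, then place them in the prompt so the model answers from those sources and can cite them. The sketch below is an illustration only. It assumes a tiny in-memory document set and a naive word-overlap retriever in place of a real search index, and it stops at building the prompt rather than calling any model. All names (`DOCUMENTS`, `retrieve`, `build_prompt`) are hypothetical.

```python
import re

# Hypothetical stand-in for corporate knowledge; a real system would query a search index.
DOCUMENTS = {
    "vacation-policy": "Employees accrue 1.5 vacation days per month of service.",
    "expense-policy": "Submit expense reports within 30 days of purchase.",
    "remote-work": "Remote work requires manager approval and a secure VPN connection.",
}


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question (a naive retriever)."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(text)), doc_id)
              for doc_id, text in documents.items()]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for _, doc_id in matches[:top_k]]


def build_prompt(question, documents, top_k=2):
    """Assemble a grounded prompt that asks the model to cite source ids."""
    sources = retrieve(question, documents, top_k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in sources)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )
```

The string returned by `build_prompt` is what would be sent to a language model; keeping the source ids in the prompt is what makes verifiable citations possible in the answer.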

Combine Hybrid Retrieval and Semantic Ranking to improve generative AI applications

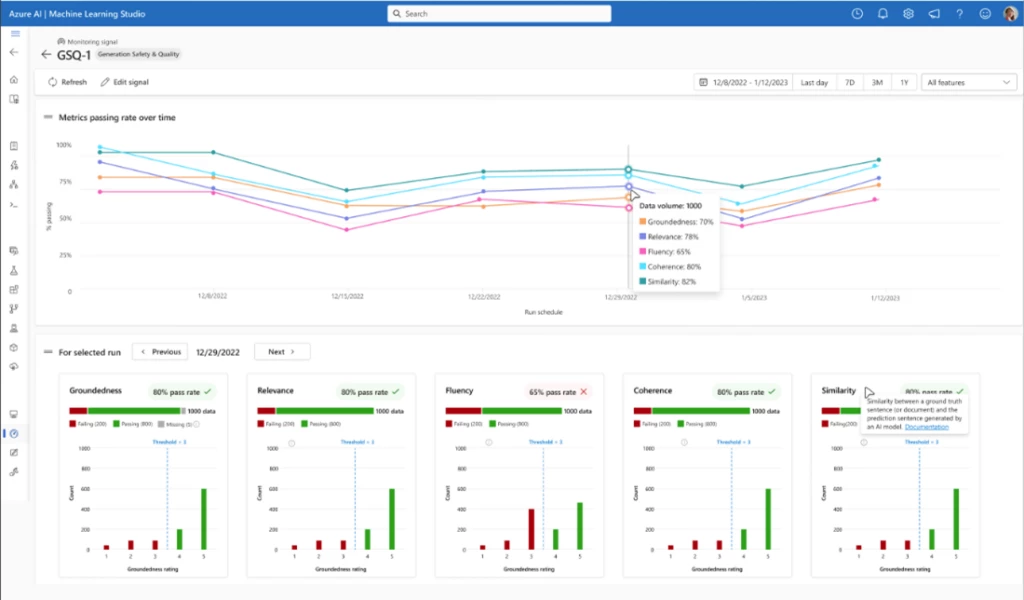

Speaking of search, through extensive testing on both representative customer indexes and popular academic benchmarks, Microsoft found that a combination of the following techniques creates the most effective retrieval engine for a majority of customer scenarios, and is especially powerful in the context of generative AI:

- Chunking long-form content

- Employing hybrid retrieval (combining BM25 and vector search)

- Activating semantic ranking

Any developer building generative AI applications will want to experiment with hybrid retrieval and reranking strategies to improve the accuracy of results and delight end users.
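Two of the techniques listed above can be illustrated without any search service. The sketch below shows one simple way to chunk long text into overlapping word windows, and one common way to merge a keyword ranking with a vector ranking: Reciprocal Rank Fusion (RRF). The choice of RRF, the chunk sizes, and the constant `k=60` are assumptions made for illustration, not details given in this post, and the semantic ranking step (a reranker applied to the fused results) is not shown.

```python
def chunk_words(text, max_words=50, overlap=10):
    """Split text into word windows of at most `max_words`, sharing `overlap` words."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of ids; each list adds 1 / (k + rank) per id."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by id for deterministic output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from a keyword (BM25-style) query and a vector query.
keyword_ranking = ["a", "b", "c"]
vector_ranking = ["c", "a", "d"]
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
```

Documents that rank well in both lists ("a" and "c" here) rise to the top of `fused`, which is the intuition behind hybrid retrieval: keyword and vector search catch different kinds of matches, and fusion rewards agreement.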

Improve the efficiency of your Azure OpenAI Service application with Azure Cosmos DB vector search

We recently expanded our documentation and tutorials with sample code to help customers learn more about the power of combining Azure Cosmos DB and Azure OpenAI Service. Applying Azure Cosmos DB vector search capabilities to Azure OpenAI applications enables you to store long-term memory and chat history, improving the quality and efficiency of your LLM solution for users. This is because vector search allows you to efficiently query back the most relevant context to personalize Azure OpenAI prompts in a token-efficient manner. Storing vector embeddings alongside the data in an integrated solution minimizes the need to manage data synchronization and helps accelerate your time-to-market for AI app development.
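As a rough illustration of why vector search keeps prompts token-efficient, the sketch below stores chat history entries next to their embeddings and returns only the entries most similar to the current query. It uses plain Python with cosine similarity and hand-written three-dimensional vectors. It does not use the Azure Cosmos DB API, and real embeddings would come from an embedding model and have far more dimensions. All names and data are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_relevant(query_vector, history, top_k=2):
    """Return the texts of the `top_k` history items closest to the query vector."""
    ranked = sorted(
        history,
        key=lambda item: cosine_similarity(query_vector, item["embedding"]),
        reverse=True,
    )
    return [item["text"] for item in ranked[:top_k]]


# Toy chat history: each record keeps its text and embedding together.
CHAT_HISTORY = [
    {"text": "User prefers vegetarian recipes.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "User asked about flight delays.", "embedding": [0.0, 0.2, 0.9]},
    {"text": "User is allergic to peanuts.", "embedding": [0.8, 0.3, 0.1]},
]

# Hypothetical embedding of a new food-related question.
context = most_relevant([1.0, 0.2, 0.0], CHAT_HISTORY, top_k=2)
```

Only the two food-related memories are selected for the prompt, so the unrelated flight question costs no tokens. Keeping each embedding in the same record as its text mirrors the integrated storage approach described above.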

See the full infographic.

Embrace the future of data and AI at upcoming Microsoft events

Azure continuously improves as we listen to our customers and advance our platform for excellence in applied data and AI. We hope you'll join us at one of our upcoming events to learn about more innovations coming to Azure and to network directly with Microsoft experts and industry peers.

- Enterprise scale open-source analytics on containers: Join Arun Ulagaratchagan (CVP, Azure Data), Kishore Chaliparambil (GM, Azure Data), and Balaji Sankaran (GM, HDInsight) for a webinar on October 3rd to learn more about the latest developments in HDInsight. Microsoft will unveil a full-stack refresh with new open-source workloads, container-based architecture, and pre-built Azure integrations. Find out how to use our modern platform to tune your analytics applications for optimal costs and improved performance, and integrate it with Microsoft Fabric to enable every role in your organization.

- Microsoft Ignite is one of our largest events of the year for technical business leaders, IT professionals, developers, and enthusiasts. Join us November 14-17, 2023, virtually or in-person, to hear the latest innovations around AI, learn from product and partner experts, build in-demand skills, and connect with the broader community.
