
With S3 Object Lambda, you can use your own code to process data retrieved from Amazon S3 as it is returned to an application. Over time, we added new capabilities to S3 Object Lambda, like the ability to add your own code to S3 HEAD and LIST API requests, in addition to the support for S3 GET requests that was available at launch.

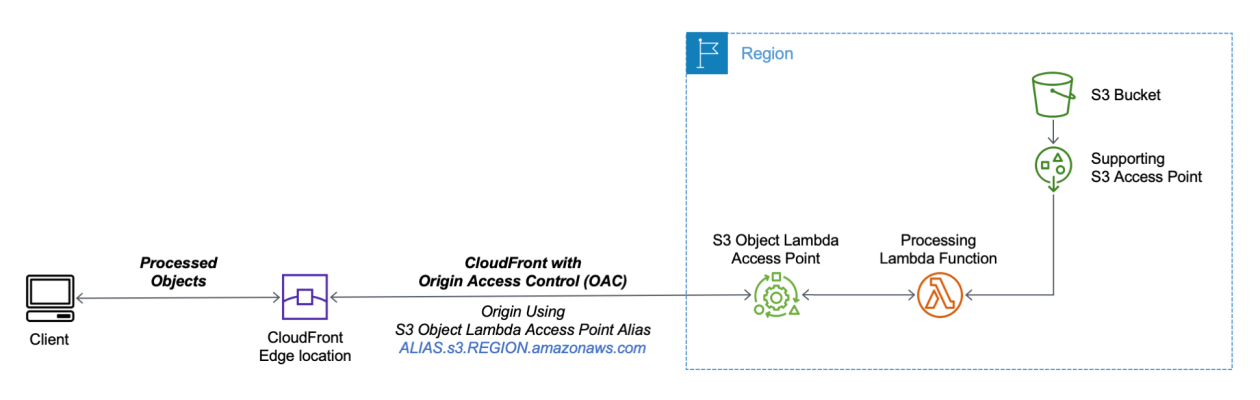

Today, we’re launching aliases for S3 Object Lambda Access Points. Aliases are now automatically generated when S3 Object Lambda Access Points are created and are interchangeable with bucket names anywhere you use a bucket name to access data stored in Amazon S3. Therefore, your applications don’t need to know about S3 Object Lambda and can treat the alias as a bucket name.
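Because the alias stands in for a bucket name, existing S3 client code should not need to change. The following is a minimal sketch of that idea, assuming a boto3-style client is passed in; the helper name `read_text` is hypothetical and not part of any AWS SDK.

```python
def read_text(s3_client, bucket_or_alias, key):
    """Fetch an object and decode it as UTF-8 text.

    bucket_or_alias may be a regular bucket name or an S3 Object Lambda
    Access Point alias; the get_object call is identical in both cases.
    """
    response = s3_client.get_object(Bucket=bucket_or_alias, Key=key)
    return response["Body"].read().decode("utf-8")


# Example call (requires boto3 and AWS credentials; ALIAS is a placeholder):
#   import boto3
#   print(read_text(boto3.client("s3"), "ALIAS", "s3.txt"))
```

Passing the client as an argument keeps the helper easy to exercise with a stub, and makes it clear that only the `Bucket` value changes when switching to the alias.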

You can now use an S3 Object Lambda Access Point alias as an origin for your Amazon CloudFront distribution to tailor or customize data for end users. You can use this to implement automatic image resizing or to tag or annotate content as it is downloaded. Many images still use older formats like JPEG or PNG, and you can use a transcoding function to deliver images in more efficient formats like WebP, BPG, or HEIC. Digital images contain metadata, and you can implement a function that strips metadata to help satisfy data privacy requirements.

Let’s see how this works in practice. First, I’ll show a simple example using text that you can follow along with by just using the AWS Management Console. After that, I’ll implement a more advanced use case processing images.

Using an S3 Object Lambda Access Point as the Origin of a CloudFront Distribution

For simplicity, I’m using the same application as in the launch post, which changes all text in the original file to uppercase. This time, I use the S3 Object Lambda Access Point alias to set up a public distribution with CloudFront.

I follow the same steps as in the launch post to create the S3 Object Lambda Access Point and the Lambda function. Because the Lambda runtimes for Python 3.8 and later do not include the requests module, I update the function code to use urlopen from the Python Standard Library:

import boto3
from urllib.request import urlopen

s3 = boto3.client('s3')

def lambda_handler(event, context):
    print(event)

    object_get_context = event['getObjectContext']
    request_route = object_get_context['outputRoute']
    request_token = object_get_context['outputToken']
    s3_url = object_get_context['inputS3Url']

    # Get object from S3
    response = urlopen(s3_url)
    original_object = response.read().decode('utf-8')

    # Transform object
    transformed_object = original_object.upper()

    # Write object back to S3 Object Lambda
    s3.write_get_object_response(
        Body=transformed_object,
        RequestRoute=request_route,
        RequestToken=request_token)

    return

To test that this is working, I open the same file from the bucket and through the S3 Object Lambda Access Point. In the S3 console, I select the bucket and a sample file (called s3.txt) that I uploaded earlier and choose Open.

A new browser tab is opened (you might need to disable the pop-up blocker in your browser), and its content is the original file with mixed-case text:

Amazon Simple Storage Service (Amazon S3) is an object storage service that offers...

I choose Object Lambda Access Points from the navigation pane and select the AWS Region I used before from the dropdown. Then, I search for the S3 Object Lambda Access Point that I just created. I select the same file as before and choose Open.

In the new tab, the text has been processed by the Lambda function and is now all in uppercase:

AMAZON SIMPLE STORAGE SERVICE (AMAZON S3) IS AN OBJECT STORAGE SERVICE THAT OFFERS...

Now that the S3 Object Lambda Access Point is correctly configured, I can create the CloudFront distribution. Before I do that, in the list of S3 Object Lambda Access Points in the S3 console, I copy the Object Lambda Access Point alias that has been automatically created.

In the CloudFront console, I choose Distributions in the navigation pane and then Create distribution. In the Origin domain, I use the S3 Object Lambda Access Point alias and the Region. The full syntax of the domain is:

ALIAS.s3.REGION.amazonaws.com
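As a small illustration of that syntax, the origin domain can be assembled from the two values. This is only a sketch; the function name `origin_domain` is hypothetical, and the Region shown in the example call is an arbitrary placeholder.

```python
def origin_domain(alias: str, region: str) -> str:
    """Build the CloudFront origin domain in the form ALIAS.s3.REGION.amazonaws.com."""
    if not alias or not region:
        raise ValueError("alias and region are both required")
    return f"{alias}.s3.{region}.amazonaws.com"


# Placeholder values; use the alias copied from the S3 console and your own Region.
print(origin_domain("ALIAS", "us-east-1"))
```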

S3 Object Lambda Access Points cannot be public, so I use CloudFront origin access control (OAC) to authenticate requests to the origin. For Origin access, I select Origin access control settings and choose Create control setting. I write a name for the control setting and select Sign requests and S3 in the Origin type dropdown.

Now, my Origin access control settings use the configuration I just created.

To reduce the number of requests going through S3 Object Lambda, I enable Origin Shield and choose the closest Origin Shield Region to the Region I’m using. Then, I select the CachingOptimized cache policy and create the distribution. As the distribution is being deployed, I update permissions for the resources used by the distribution.

Setting Up Permissions to Use an S3 Object Lambda Access Point as the Origin of a CloudFront Distribution

First, the S3 Object Lambda Access Point needs to give access to the CloudFront distribution. In the S3 console, I select the S3 Object Lambda Access Point and, in the Permissions tab, I update the policy with the following:

{
    "Version": "2012-10-17",
    "Statement": [
        ...
    ]
}

The supporting access point also needs to allow access to CloudFront when called via S3 Object Lambda. I select the access point and update the policy in the Permissions tab:

{
    "Version": "2012-10-17",
    "Id": "default",
    "Statement": [
        {
            "Sid": "s3objlambda",
            "Effect": "Allow",
            "Principal": {
                "Service": "cloudfront.amazonaws.com"
            },
            "Action": "s3:*",
            "Resource": [
                "arn:aws:s3:REGION:ACCOUNT:accesspoint/NAME",
                "arn:aws:s3:REGION:ACCOUNT:accesspoint/NAME/object/*"
            ],
            "Condition": { ... }
        }
    ]
}
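The condition block of the supporting access point policy is not shown in full here. As a hedged sketch only, one way to express "when called via S3 Object Lambda" is the `aws:CalledVia` global condition key; that choice is an assumption on my part, and the helper name `supporting_access_point_policy` is hypothetical. REGION, ACCOUNT, and NAME are the same placeholders used in the policy text.

```python
import json


def supporting_access_point_policy(region, account, name):
    """Return a supporting access point policy document as a dict."""
    arn = f"arn:aws:s3:{region}:{account}:accesspoint/{name}"
    return {
        "Version": "2012-10-17",
        "Id": "default",
        "Statement": [
            {
                "Sid": "s3objlambda",
                "Effect": "Allow",
                "Principal": {"Service": "cloudfront.amazonaws.com"},
                "Action": "s3:*",
                "Resource": [arn, f"{arn}/object/*"],
                # ASSUMPTION: this condition is illustrative, not copied from
                # the policy above. aws:CalledVia restricts the statement to
                # requests that were made through S3 Object Lambda.
                "Condition": {
                    "ForAnyValue:StringEquals": {
                        "aws:CalledVia": "s3-object-lambda.amazonaws.com"
                    }
                },
            }
        ],
    }


print(json.dumps(supporting_access_point_policy("REGION", "ACCOUNT", "NAME"), indent=4))
```

Generating the document from a function keeps the two resource ARNs in sync, but the condition should be checked against the current S3 Object Lambda documentation before use.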

The S3 bucket needs to allow access to the supporting access point. I select the bucket and update the policy in the Permissions tab:

{
    "Version": "2012-10-17",
    "Statement": [
        ...
    ]
}

Finally, CloudFront needs to be able to invoke the Lambda function. In the Lambda console, I choose the Lambda function used by S3 Object Lambda, and then, in the Configuration tab, I choose Permissions. In the Resource-based policy statements section, I choose Add permissions and select AWS Account. I enter a unique Statement ID. Then, I enter cloudfront.amazonaws.com as Principal, select lambda:InvokeFunction from the Action dropdown, and choose Save. We are working to simplify this step in the future. I’ll update this post when that’s available.
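The same permission can probably be added programmatically instead of through the console. The sketch below assumes a boto3 Lambda client and uses its `add_permission` call; the wrapper name `allow_cloudfront_invoke` and the example argument values are hypothetical.

```python
def allow_cloudfront_invoke(lambda_client, function_name, statement_id):
    """Add a resource-based policy statement letting CloudFront invoke the function."""
    return lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=statement_id,  # must be unique within the function's policy
        Action="lambda:InvokeFunction",
        Principal="cloudfront.amazonaws.com",
    )


# Example call (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   allow_cloudfront_invoke(boto3.client("lambda"), "FUNCTION_NAME", "cloudfront-invoke")
```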

Testing the CloudFront Distribution

When the distribution has been deployed, I test that the setup is working with the same sample file I used before. In the CloudFront console, I select the distribution and copy the Distribution domain name. I can use the browser and enter https://DISTRIBUTION_DOMAIN_NAME/s3.txt in the navigation bar to send a request to CloudFront and get the file processed by S3 Object Lambda. To quickly get all the information, I use curl with the -i option to see the HTTP status and the headers in the response.

It works! As expected, the content processed by the Lambda function is all uppercase. Because this is the first invocation for the distribution, it has not been returned from the cache (x-cache: Miss from cloudfront). The request went through S3 Object Lambda to process the file using the Lambda function I provided.

Let’s try the same request again.

This time the content is returned from the CloudFront cache (x-cache: Hit from cloudfront), and there was no further processing by S3 Object Lambda. By using S3 Object Lambda as the origin, the CloudFront distribution serves content that has been processed by a Lambda function and can be cached to reduce latency and optimize costs.
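The hit-or-miss check on the x-cache header can also be scripted. This is a rough sketch using only the Python Standard Library; the function names are hypothetical, and only the two header values quoted in this post are classified, with anything else reported as unknown.

```python
from urllib.request import urlopen


def cache_status(headers):
    """Classify a response as 'hit', 'miss', or 'unknown' from its x-cache header.

    headers is any mapping with a .get method. With a plain dict the key
    must be the lowercase 'x-cache'; HTTP response headers from urlopen
    are looked up case-insensitively.
    """
    value = (headers.get("x-cache") or "").lower()
    if value.startswith("hit"):
        return "hit"
    if value.startswith("miss"):
        return "miss"
    return "unknown"


def fetch_cache_status(url):
    """Request the URL and report whether CloudFront served it from cache."""
    with urlopen(url) as response:
        return cache_status(response.headers)


# Example call (needs a deployed distribution; the domain is a placeholder):
#   fetch_cache_status("https://DISTRIBUTION_DOMAIN_NAME/s3.txt")
```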

Resizing Images Using S3 Object Lambda and CloudFront

As I mentioned at the beginning of this post, one of the use cases that can be implemented using S3 Object Lambda and CloudFront is image transformation. Let’s create a CloudFront distribution that can dynamically resize an image by passing the desired width and height as query parameters (w and h respectively). For example, appending ?w=200&h=150 to an image URL requests a version that is 200 by 150 pixels.

For this setup to work, I need to make two changes to the CloudFront distribution. First, I create a new cache policy to include query parameters in the cache key. In the CloudFront console, I choose Policies in the navigation pane. In the Cache tab, I choose Create cache policy. Then, I enter a name for the cache policy.

In the Query settings of the Cache key settings, I select the option to Include the following query parameters and add w (for the width) and h (for the height).
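For readers who prefer the API to the console, the cache policy described above might look roughly like the following configuration, which could be passed to the boto3 CloudFront client as `create_cache_policy(CachePolicyConfig=...)`. The helper name is hypothetical, and the TTL, header, cookie, and compression settings are assumptions chosen for illustration; only the w and h query parameters come from this post.

```python
def resize_cache_policy_config(name):
    """Build a CachePolicyConfig that puts the w and h query parameters in the cache key."""
    query_parameters = ["w", "h"]
    return {
        "Name": name,
        # ASSUMPTION: TTL values are illustrative defaults, not from the post.
        "MinTTL": 1,
        "DefaultTTL": 86400,
        "MaxTTL": 31536000,
        "ParametersInCacheKeyAndForwardedToOrigin": {
            "EnableAcceptEncodingGzip": True,
            "EnableAcceptEncodingBrotli": True,
            "HeadersConfig": {"HeaderBehavior": "none"},
            "CookiesConfig": {"CookieBehavior": "none"},
            "QueryStringsConfig": {
                # Only the listed parameters become part of the cache key.
                "QueryStringBehavior": "whitelist",
                "QueryStrings": {
                    "Quantity": len(query_parameters),
                    "Items": query_parameters,
                },
            },
        },
    }


# Example call (requires boto3 and AWS credentials; the name is a placeholder):
#   import boto3
#   boto3.client("cloudfront").create_cache_policy(
#       CachePolicyConfig=resize_cache_policy_config("resize-by-w-and-h"))
```

Including only w and h in the cache key means requests that differ in other query parameters still share a cached object.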

Then, in the Behaviors tab of the distribution, I select the default behavior and choose Edit.

There, I update the Cache key and origin requests section:

- In the Cache policy, I use the new cache policy to include the w and h query parameters in the cache key.
- In the Origin request policy, I use the AllViewerExceptHostHeader managed policy to forward query parameters to the origin.

Now I can update the Lambda function code. To resize images, this function uses the Pillow module, which needs to be packaged with the function when it is uploaded to Lambda. You can deploy the function using a tool like the AWS SAM CLI or the AWS CDK. Compared to the previous example, this function also handles and returns HTTP errors, such as when content is not found in the bucket.

import io
import boto3
from urllib.request import urlopen
from urllib.error import HTTPError
from urllib.parse import urlparse, parse_qs
from PIL import Image

s3 = boto3.client('s3')

def lambda_handler(event, context):
    print(event)

    object_get_context = event['getObjectContext']
    request_route = object_get_context['outputRoute']
    request_token = object_get_context['outputToken']
    s3_url = object_get_context['inputS3Url']

    # Get object from S3
    try:
        original_image = Image.open(urlopen(s3_url))
    except HTTPError as err:
        # Pass the HTTP error (for example, 404) back to the caller
        s3.write_get_object_response(
            StatusCode=err.code,
            ErrorCode="HTTPError",
            ErrorMessage=err.reason,
            RequestRoute=request_route,
            RequestToken=request_token)
        return

    # Get width and height from query parameters
    user_request = event['userRequest']
    url = user_request['url']
    parsed_url = urlparse(url)
    query_parameters = parse_qs(parsed_url.query)

    try:
        width, height = int(query_parameters['w'][0]), int(query_parameters['h'][0])
    except (KeyError, ValueError):
        width, height = 0, 0

    # Transform object
    if width > 0 and height > 0:
        # Image.ANTIALIAS was removed in Pillow 10; Image.LANCZOS is the same filter
        transformed_image = original_image.resize((width, height), Image.LANCZOS)
    else:
        transformed_image = original_image

    transformed_bytes = io.BytesIO()
    transformed_image.save(transformed_bytes, format="JPEG")

    # Write object back to S3 Object Lambda
    s3.write_get_object_response(
        Body=transformed_bytes.getvalue(),
        RequestRoute=request_route,
        RequestToken=request_token)

    return

I add a picture I took of the Trevi Fountain to the source bucket. To start, I generate a small thumbnail (200 by 150 pixels).

https://DISTRIBUTION_DOMAIN_NAME/trevi-fountain.jpeg?w=200&h=150

Now, I ask for a slightly larger version (400 by 300 pixels):

https://DISTRIBUTION_DOMAIN_NAME/trevi-fountain.jpeg?w=400&h=300

It works as expected. The first invocation with a specific size is processed by the Lambda function. Further requests with the same width and height are served from the CloudFront cache.
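The way the function reads w and h from the request URL can be tried in isolation, without Lambda or Pillow. The sketch below mirrors that parsing step; the standalone function `parse_dimensions` is a hypothetical refactoring for illustration, and it folds the "both values must be positive" check into the same helper.

```python
from urllib.parse import urlparse, parse_qs


def parse_dimensions(url):
    """Return (width, height) from the w and h query parameters.

    Returns (0, 0), meaning "do not resize", when either parameter is
    missing, not an integer, or not positive.
    """
    query = parse_qs(urlparse(url).query)
    try:
        width, height = int(query["w"][0]), int(query["h"][0])
    except (KeyError, ValueError):
        return 0, 0
    if width <= 0 or height <= 0:
        return 0, 0
    return width, height


print(parse_dimensions("https://DISTRIBUTION_DOMAIN_NAME/trevi-fountain.jpeg?w=200&h=150"))
```

Falling back to (0, 0) rather than raising keeps the behavior of the function in this post: a request without valid dimensions returns the image at its original size.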

Availability and Pricing

Aliases for S3 Object Lambda Access Points are available today in all commercial AWS Regions. There is no additional cost for aliases. With S3 Object Lambda, you pay for the Lambda compute and request charges required to process the data, and for the data S3 Object Lambda returns to your application. You also pay for the S3 requests that are invoked by your Lambda function. For more information, see Amazon S3 Pricing.

Aliases are now automatically generated when an S3 Object Lambda Access Point is created. For existing S3 Object Lambda Access Points, aliases are automatically assigned and ready for use.

It’s now easier to use S3 Object Lambda with existing applications, and aliases open many new possibilities. For example, you can use aliases with CloudFront to create a website that converts content in Markdown to HTML, resizes and watermarks images, or masks personally identifiable information (PII) from text, images, and documents.

Customize content for your end users using S3 Object Lambda with CloudFront.

— Danilo
