Many of our customers (such as Formula One, Honeycomb, Intuit, SmugMug, and Snap Inc.) use the Arm-based AWS Graviton2 processor for their workloads and enjoy better price performance. Starting today, you can get the same benefits for your AWS Lambda functions. You can now configure new and existing functions to run on x86 or Arm/Graviton2 processors.

With this choice, you can save money in two ways. First, your functions run more efficiently thanks to the Graviton2 architecture. Second, you pay less for the time that they run. In fact, Lambda functions powered by Graviton2 are designed to deliver up to 19 percent better performance at 20 percent lower cost.

With Lambda, you are charged based on the number of requests for your functions and the duration (the time it takes for your code to execute) with millisecond granularity. For functions using the Arm/Graviton2 architecture, duration charges are 20 percent lower than the current pricing for x86. The same 20 percent reduction also applies to duration charges for functions using Provisioned Concurrency.
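As a rough illustration of the duration billing described above, here is a minimal Python sketch. The price per GB-second and the workload figures are placeholder assumptions for the arithmetic, not published rates; only the 20 percent reduction comes from the text. The per-request charge is left out.

```python
def duration_cost(invocations, avg_duration_ms, memory_mb, price_per_gb_second):
    """Return the duration charge for a number of invocations."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return gb_seconds * price_per_gb_second


# Hypothetical x86 price per GB-second, used only for illustration.
X86_PRICE = 0.0000166667
# Duration charges on Arm/Graviton2 are 20 percent lower than on x86.
ARM_PRICE = X86_PRICE * 0.80

# Assumed workload: 10 million invocations, 120 ms each, 512 MB of memory.
x86_cost = duration_cost(10_000_000, 120, 512, X86_PRICE)
arm_cost = duration_cost(10_000_000, 120, 512, ARM_PRICE)
savings_percent = (x86_cost - arm_cost) / x86_cost * 100

print(f"x86: ${x86_cost:.2f}  Arm: ${arm_cost:.2f}  savings: {savings_percent:.0f}%")
```

This only models the price difference. If the function also runs faster on Graviton2, the shorter duration reduces the Arm cost further.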

In addition to the price reduction, functions using the Arm architecture benefit from the performance and security built into the Graviton2 processor. Workloads using multithreading and multiprocessing, or performing many I/O operations, can experience lower execution time and, as a consequence, even lower costs. This is particularly useful now that you can use Lambda functions with up to 10 GB of memory and 6 vCPUs. For example, you can get better performance for web and mobile backends, microservices, and data processing systems.

If your functions don’t use architecture-specific binaries, including in their dependencies, you can switch from one architecture to the other. This is often the case for many functions using interpreted languages such as Node.js and Python or functions compiled to Java bytecode.

All Lambda runtimes built on top of Amazon Linux 2, including the custom runtime, are supported on Arm, with the exception of Node.js 10, which has reached end of support. If you have binaries in your function packages, you need to rebuild the function code for the architecture you want to use. Functions packaged as container images need to be built for the architecture (x86 or Arm) they are going to use.
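One way to check whether a deployment package contains architecture-specific binaries is to look for ELF files and read the target machine from their headers. The sketch below assumes the package is a .zip archive held in memory; the function name `native_binaries` is made up for this example, and the check covers only Linux ELF binaries.

```python
import io
import struct
import zipfile

# e_machine values defined by the ELF specification.
ELF_MACHINES = {62: "x86_64", 183: "arm64"}


def native_binaries(package_bytes):
    """Map each ELF file in a .zip deployment package to its CPU architecture."""
    found = {}
    with zipfile.ZipFile(io.BytesIO(package_bytes)) as package:
        for name in package.namelist():
            header = package.read(name)[:20]
            if len(header) < 20 or header[:4] != b"\x7fELF":
                continue  # not a native Linux binary
            # Byte 5 is the data encoding: 1 = little-endian, 2 = big-endian.
            byte_order = "<" if header[5] == 1 else ">"
            # e_machine is a 16-bit field at offset 18 of the ELF header.
            (machine,) = struct.unpack_from(byte_order + "H", header, 18)
            found[name] = ELF_MACHINES.get(machine, f"unknown ({machine})")
    return found
```

An empty result suggests the package has no native binaries, so the function is a candidate for switching architecture without a rebuild. Any entry reported as x86_64 needs to be rebuilt before moving to arm64.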

To measure the difference between architectures, you can create two versions of a function, one for x86 and one for Arm. You can then send traffic to the function via an alias using weights to distribute traffic between the two versions. In Amazon CloudWatch, performance metrics are collected by function versions, and you can look at key indicators (such as duration) using statistics. You can then compare, for example, average and p99 duration between the two architectures.
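The weighted alias described above can be expressed as a small configuration object. This sketch only builds the request parameters, assuming the shape accepted by the boto3 Lambda client's `update_alias` call; the function name, alias name, and version numbers are hypothetical.

```python
def weighted_alias_config(function_name, alias_name, primary_version,
                          secondary_version, secondary_weight):
    """Build keyword arguments splitting traffic between two function versions."""
    if not 0.0 <= secondary_weight <= 1.0:
        raise ValueError("secondary_weight must be between 0.0 and 1.0")
    return {
        "FunctionName": function_name,
        "Name": alias_name,
        # Version that receives the remaining share of the traffic.
        "FunctionVersion": primary_version,
        "RoutingConfig": {
            "AdditionalVersionWeights": {secondary_version: secondary_weight}
        },
    }


# Hypothetical setup: version "1" runs on x86, version "2" on Arm, split evenly.
config = weighted_alias_config("prime-numbers", "compare", "1", "2", 0.5)
print(config)
```

With AWS credentials configured, the result could be passed to a client as `boto3.client("lambda").update_alias(**config)`. That call is left out here so the sketch runs without an AWS account.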

You can also use function versions and weighted aliases to control the rollout in production. For example, you can deploy the new version to a small share of invocations (such as 1 percent) and then increase up to 100 percent for a complete deployment. During rollout, you can lower the weight or set it to zero if your metrics show something suspicious (such as an increase in errors).
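The rollout logic above can be sketched as a function that picks the next alias weight from the current weight and an observed error rate. The step sizes and the 1 percent error threshold are assumptions chosen for illustration, not recommended values.

```python
def next_weight(current_weight, error_rate, max_error_rate=0.01,
                steps=(0.01, 0.10, 0.50, 1.0)):
    """Return the next traffic weight for the new version during a rollout."""
    if error_rate > max_error_rate:
        return 0.0  # roll back: send all traffic to the previous version
    for step in steps:
        if step > current_weight:
            return step  # move to the next larger share of invocations
    return 1.0  # rollout already complete


weight = 0.0
for observed_error_rate in (0.0, 0.002, 0.001, 0.0):
    weight = next_weight(weight, observed_error_rate)
    print(f"new version now receives {weight:.0%} of invocations")
```

In practice, the error rate would come from the CloudWatch metrics of the new version, and each returned weight would be applied to the alias before waiting for the next observation window.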

Let’s see how this new capability works in practice with a few examples.

Changing Architecture for Functions with No Binary Dependencies

When there are no binary dependencies, changing the architecture of a Lambda function is like flipping a switch. For example, some time ago, I built a quiz app with a Lambda function. With this app, you can ask and answer questions using a web API. I use an Amazon API Gateway HTTP API to trigger the function. Here’s the Node.js code including a few sample questions at the beginning:

    const questions = [
      {
        question:
          "Are there more synapses (nerve connections) in your brain or stars in our galaxy?",
        answers: [
          "More stars in our galaxy.",
          "More synapses (nerve connections) in your brain.",
          "They are about the same.",
        ],
        correctAnswer: 1,
      },
      {
        question:
          "Did Cleopatra live closer in time to the launch of the iPhone or to the building of the Giza pyramids?",
        answers: [
          "To the launch of the iPhone.",
          "To the building of the Giza pyramids.",
          "Cleopatra lived right in between those events.",
        ],
        correctAnswer: 0,
      },
      {
        question:
          "Did mammoths still roam the earth while the pyramids were being built?",
        answers: [
          "No, they were all extinct long before.",
          "Mammoth extinction is estimated right about that time.",
          "Yes, some still survived at the time.",
        ],
        correctAnswer: 2,
      },
    ];

    exports.handler = async (event) => {
      console.log(event);

      const method = event.requestContext.http.method;
      const path = event.requestContext.http.path;
      // Strip leading and trailing slashes, then split the path into segments.
      const splitPath = path.replace(/^\/+|\/+$/g, "").split("/");

      console.log(method, path, splitPath);

      // NOTE: the object literals for the default and "not found" responses were
      // lost in extraction; the values used below are assumed.
      let response = { statusCode: 200, body: "" };

      if (splitPath[0] == "questions") {
        if (splitPath.length == 1) {
          // GET /questions returns the list of question IDs.
          console.log(Object.keys(questions));
          response.body = JSON.stringify(Object.keys(questions));
        } else {
          const questionId = splitPath[1];
          const question = questions[questionId];
          if (question === undefined) {
            response = {
              statusCode: 404,
              body: JSON.stringify({ message: "Question not found" }),
            };
          } else {
            if (splitPath.length == 2) {
              // GET /questions/<id> returns the question.
              response.body = JSON.stringify(question);
            } else {
              // GET /questions/<id>/<answer> checks the answer.
              const answerId = splitPath[2];
              if (answerId == question.correctAnswer) {
                response.body = JSON.stringify({ correct: true });
              } else {
                response.body = JSON.stringify({ correct: false });
              }
            }
          }
        }
      }

      return response;
    };

To start my quiz, I ask for the list of question IDs. To do so, I use curl with an HTTP GET on the /questions endpoint:

Then, I ask for more information on a question by adding the ID to the endpoint:

I plan to use this function in production. I expect many invocations and look for options to optimize my costs. In the Lambda console, I see that this function is using the x86_64 architecture.

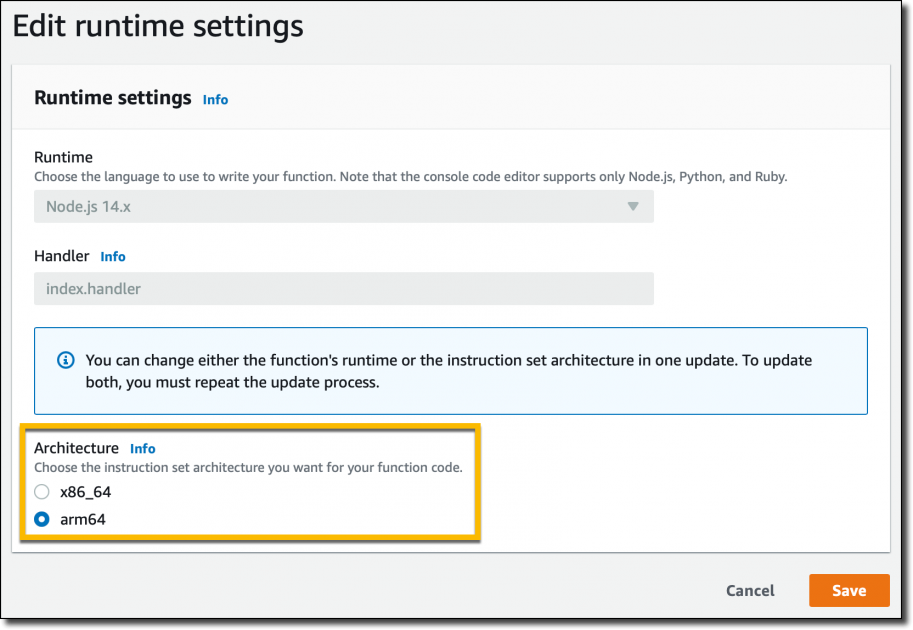

Because this function is not using any binaries, I switch the architecture to arm64 and benefit from the lower pricing.

The change in architecture doesn’t change the way the function is invoked or communicates its response back. This means that the integration with the API Gateway, as well as integrations with other applications or tools, are not affected by this change and continue to work as before.

I continue my quiz with no hint that the architecture used to run the code has changed in the backend. I answer the previous question by adding the number of the answer (starting from zero) to the question endpoint:

That’s correct! Cleopatra lived closer in time to the launch of the iPhone than to the building of the Giza pyramids. While I’m digesting this piece of information, I realize that I completed the migration of the function to Arm and optimized my costs.

Changing Architecture for Functions Packaged Using Container Images

When we introduced the capability to package and deploy Lambda functions using container images, I did a demo with a Node.js function generating a PDF file with the PDFKit module. Let’s see how to migrate this function to Arm.

Each time it’s invoked, the function creates a new PDF mail containing random data generated by the faker.js module. The output of the function uses the syntax of the Amazon API Gateway to return the PDF file using Base64 encoding. For convenience, I replicate the code (app.js) of the function here:

    const PDFDocument = require('pdfkit');
    const faker = require('faker');
    const getStream = require('get-stream');

    // NOTE: the options passed to doc.text() were lost in extraction;
    // right alignment is assumed here.
    const textOptions = { align: 'right' };

    exports.lambdaHandler = async (event) => {

        const doc = new PDFDocument();

        const randomName = faker.name.findName();

        // Address block of the recipient.
        doc.text(randomName, textOptions);
        doc.text(faker.address.streetAddress(), textOptions);
        doc.text(faker.address.secondaryAddress(), textOptions);
        doc.text(faker.address.zipCode() + ' ' + faker.address.city(), textOptions);
        doc.moveDown();
        doc.text('Dear ' + randomName + ',');
        doc.moveDown();
        // Three paragraphs of random text for the body of the letter.
        for (let i = 0; i < 3; i++) {
            doc.text(faker.lorem.paragraph());
            doc.moveDown();
        }
        doc.text(faker.name.findName(), textOptions);
        doc.end();

        // Collect the generated PDF and encode it for the API Gateway.
        const pdfBuffer = await getStream.buffer(doc);
        const pdfBase64 = pdfBuffer.toString('base64');

        const response = {
            statusCode: 200,
            headers: {
                'Content-Length': Buffer.byteLength(pdfBase64),
                'Content-Type': 'application/pdf',
                'Content-disposition': 'attachment;filename=test.pdf'
            },
            isBase64Encoded: true,
            body: pdfBase64
        };
        return response;
    };

To run this code, I need the pdfkit, faker, and get-stream npm modules. These packages and their versions are described in the package.json and package-lock.json files.

I update the FROM line in the Dockerfile to use an AWS base image for Lambda for the Arm architecture. Given the chance, I also update the image to use Node.js 14 (I was using Node.js 12 at the time). This is the only change I need to switch architecture.

For the next steps, I follow the post I mentioned previously. This time I use random-letter-arm for the name of the container image and for the name of the Lambda function. First, I build the image:

Then, I inspect the image to check that it’s using the right architecture:

To be sure the function works with the new architecture, I run the container locally.

Because the container image includes the Lambda Runtime Interface Emulator, I can test the function locally:

It works! The response is a JSON document containing a base64-encoded response for the API Gateway:
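To check such a response beyond eyeballing it, the base64 body can be decoded and tested for the PDF signature. This is a minimal sketch assuming the response carries the `isBase64Encoded` and `body` fields set in app.js; the sample below is a stand-in, not real output from the function.

```python
import base64
import json


def extract_pdf(response_json):
    """Decode the base64 body of an API Gateway-style response into PDF bytes."""
    response = json.loads(response_json)
    if not response.get("isBase64Encoded"):
        raise ValueError("response body is not base64-encoded")
    pdf_bytes = base64.b64decode(response["body"])
    # Every PDF file starts with the "%PDF-" signature.
    if not pdf_bytes.startswith(b"%PDF-"):
        raise ValueError("decoded body is not a PDF document")
    return pdf_bytes


# Stand-in response with the same fields as the one built in app.js.
sample = json.dumps({
    "statusCode": 200,
    "isBase64Encoded": True,
    "body": base64.b64encode(b"%PDF-1.3\n...").decode("ascii"),
})
pdf = extract_pdf(sample)
print(len(pdf), "bytes decoded")
```

Writing the returned bytes to a file with a .pdf extension lets you open the generated letter in a viewer.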

Confident that my Lambda function works with the arm64 architecture, I create a new Amazon Elastic Container Registry repository using the AWS Command Line Interface (CLI):

I tag the image and push it to the repo:

In the Lambda console, I create the random-letter-arm function and select the option to create the function from a container image.

I enter the function name, browse my ECR repositories to select the random-letter-arm container image, and choose the arm64 architecture.

I complete the creation of the function. Then, I add the API Gateway as a trigger. For simplicity, I leave the authentication of the API open.

Now, I click on the API endpoint a few times and download some PDF mails generated with random data:

The migration of this Lambda function to Arm is complete. The process will differ if you have specific dependencies that do not support the target architecture. The ability to test your container image locally helps you find and fix issues early in the process.

Comparing Different Architectures with Function Versions and Aliases

To have a function that makes some meaningful use of the CPU, I use the following Python code. It computes all prime numbers up to a limit passed as a parameter. I’m not using the best possible algorithm here (that would be the sieve of Eratosthenes), but it’s a good compromise for an efficient use of memory. To have more visibility, I add the architecture used by the function to the response of the function.

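    import json
    import math
    import platform
    import timeit


    def primes_up_to(n):
        """Return all primes up to n, using trial division by the primes found so far."""
        primes = []
        for i in range(2, n + 1):
            is_prime = True
            sqrt_i = math.isqrt(i)
            for p in primes:
                if p > sqrt_i:
                    break
                if i % p == 0:
                    is_prime = False
                    break
            if is_prime:
                primes.append(i)
        return primes


    def lambda_handler(event, context):
        start_time = timeit.default_timer()
        N = int(event['queryStringParameters']['max'])
        primes = primes_up_to(N)
        stop_time = timeit.default_timer()
        elapsed_time = stop_time - start_time

        # NOTE: the response body was lost in extraction; the fields below are
        # assumed from the imports and the surrounding text.
        response = {
            'machine': platform.machine(),
            'elapsed': elapsed_time,
            'message': 'There are {} prime numbers <= {}'.format(len(primes), N)
        }

        return {
            'statusCode': 200,
            'body': json.dumps(response)
        }

I create two function versions using different architectures.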

I use a weighted alias with 50% weight on the x86 version and 50% weight on the Arm version to distribute invocations evenly. When invoking the function through this alias, the two versions running on the two different architectures are executed with the same probability.

I create an API Gateway trigger for the function alias and then generate some load using a few terminals on my laptop. Each invocation computes prime numbers up to one million. You can see in the output how two different architectures are used to run the function.

During these executions, Lambda sends metrics to CloudWatch and the function version (ExecutedVersion) is stored as one of the dimensions.

To better understand what is happening, I create a CloudWatch dashboard to monitor the p99 duration for the two architectures. In this way, I can compare the performance of the two environments for this function and make an informed decision on which architecture to use in production.
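For readers less familiar with percentile statistics, the comparison can be sketched offline from raw duration samples grouped by function version. The nearest-rank method used here is one common definition and may not match exactly how CloudWatch computes percentiles; the sample durations are made up.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile of a list of numbers (p between 0 and 100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def compare_versions(durations_by_version):
    """Return average and p99 duration (ms) for each function version."""
    return {
        version: {
            "average": sum(samples) / len(samples),
            "p99": percentile(samples, 99),
        }
        for version, samples in durations_by_version.items()
    }


# Made-up durations in milliseconds, keyed by executed version.
stats = compare_versions({
    "1 (x86_64)": [910, 930, 925, 1400, 915],
    "2 (arm64)": [640, 655, 650, 980, 645],
})
for version, values in stats.items():
    print(version, values)
```

The p99 value is sensitive to a few slow invocations (cold starts, for example), which is why it is worth looking at alongside the average.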

For this particular workload, functions are running much faster on the Graviton2 processor, providing a better user experience and much lower costs.

Comparing Different Architectures with Lambda Power Tuning

The AWS Lambda Power Tuning open-source project, created by my friend Alex Casalboni, runs your functions using different settings and suggests a configuration to minimize costs and/or maximize performance. The project has recently been updated to let you compare two results on the same chart. This is useful to compare two versions of the same function, one using x86 and the other Arm.

For example, this chart compares x86 and Arm/Graviton2 results for the function computing prime numbers I used earlier in the post:

The function is using a single thread. In fact, the lowest duration for both architectures is reported when memory is configured with 1.8 GB. Above that, Lambda functions have access to more than 1 vCPU, but in this case, the function can’t use the additional power. For the same reason, costs are stable with memory up to 1.8 GB. With more memory, costs increase because there are no additional performance benefits for this workload.
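The shape of that chart can be reproduced with a simple model. The sketch below assumes CPU share grows linearly with memory up to one full vCPU at 1,769 MB (roughly the 1.8 GB mentioned above) and that a single-threaded function gains nothing beyond that point. The 400 ms baseline and the price per GB-second are made-up inputs.

```python
SINGLE_VCPU_MB = 1769  # assumed memory setting at which one full vCPU is available


def modeled_duration_ms(memory_mb, duration_at_one_vcpu_ms):
    """Duration of a single-threaded function under a simple CPU-share model."""
    cpu_share = min(memory_mb, SINGLE_VCPU_MB) / SINGLE_VCPU_MB
    return duration_at_one_vcpu_ms / cpu_share


def modeled_cost(memory_mb, duration_at_one_vcpu_ms, price_per_gb_second):
    """Duration charge for one invocation under the same model."""
    seconds = modeled_duration_ms(memory_mb, duration_at_one_vcpu_ms) / 1000
    return (memory_mb / 1024) * seconds * price_per_gb_second


PRICE = 0.0000133334  # hypothetical price per GB-second
for memory_mb in (512, 1024, 1769, 3008):
    duration = modeled_duration_ms(memory_mb, 400)
    cost = modeled_cost(memory_mb, 400, PRICE)
    print(f"{memory_mb:>5} MB  {duration:7.0f} ms  ${cost:.10f}")
```

Under these assumptions, halving the memory doubles the duration, so the cost stays flat up to one vCPU. Beyond that, the duration stops improving while the billed memory keeps growing, so the cost rises.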

I look at the chart and configure the function to use 1.8 GB of memory and the Arm architecture. The Graviton2 processor is clearly providing better performance and lower costs for this compute-intensive function.

Availability and Pricing

You can use Lambda functions powered by the Graviton2 processor today in US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Frankfurt), Europe (Ireland), Europe (London), Asia Pacific (Mumbai), Asia Pacific (Singapore), Asia Pacific (Sydney), and Asia Pacific (Tokyo).

The following runtimes running on top of Amazon Linux 2 are supported on Arm:

- Node.js 12 and 14

- Python 3.8 and 3.9

- Java 8 (java8.al2) and 11

- .NET Core 3.1

- Ruby 2.7

- Custom Runtime (provided.al2)

You can manage Lambda functions powered by the Graviton2 processor using AWS Serverless Application Model (SAM) and AWS Cloud Development Kit (AWS CDK). Support is also available through many AWS Lambda Partners such as AntStack, Check Point, Cloudwiry, Contino, Coralogix, Datadog, Lumigo, Pulumi, Slalom, Sumo Logic, Thundra, and Xerris.

Lambda functions using the Arm/Graviton2 architecture provide up to 34 percent price performance improvement. The 20 percent reduction in duration costs also applies when using Provisioned Concurrency. You can further reduce your costs by up to 17 percent with Compute Savings Plans. Lambda functions powered by Graviton2 are included in the AWS Free Tier up to the existing limits. For more information, see the AWS Lambda pricing page.

You can find help to optimize your workloads for the AWS Graviton2 processor in the Getting started with AWS Graviton repository.

Start running your Lambda functions on Arm today.

— Danilo
