When we launched Amazon CloudWatch back in 2009 (New Features for Amazon EC2: Elastic Load Balancing, Auto Scaling, and Amazon CloudWatch), it tracked performance metrics (CPU load, Disk I/O, and network I/O) for EC2 instances, rolled them up at one-minute intervals, and stored them for two weeks. At that time it was used to monitor instance health and to drive Auto Scaling. Today, CloudWatch is a far more comprehensive and sophisticated service. Some of the most recent additions include metrics with 1-minute granularity for all EBS volume types, CloudWatch Lambda Insights, and the Metrics Explorer.

AWS Partners have used the CloudWatch metrics to create all sorts of monitoring, alerting, and cost management tools. In order to access the metrics, the partners created polling fleets that called the ListMetrics and GetMetricData functions for each of their customers.

These fleets must scale in proportion to the number of AWS resources created by each of the partners’ customers and the number of CloudWatch metrics that are retrieved for each resource. This polling is simply undifferentiated heavy lifting that each partner must do. It adds no value, and takes precious time that could be better invested in other ways.

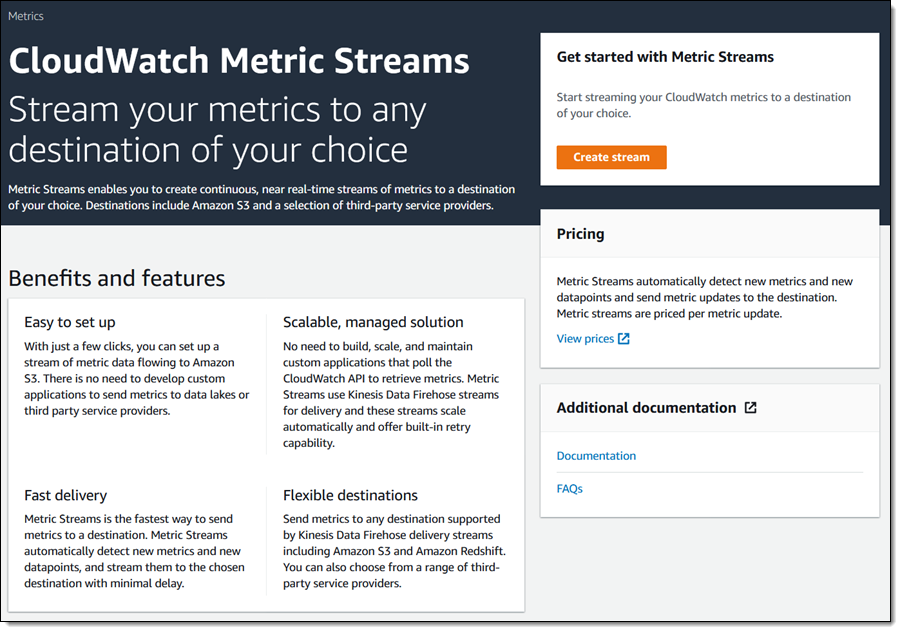

New Metric Streams

In order to make it easier for AWS Partners and others to gain access to CloudWatch metrics faster and at scale, we are launching CloudWatch Metric Streams. Instead of polling (which can result in 5 to 10 minutes of latency), metrics are delivered to a Kinesis Data Firehose stream. This is highly scalable and far more efficient, and supports two important use cases:

Partner Services – You can stream metrics to a Kinesis Data Firehose that writes data to an endpoint owned by an AWS Partner. This allows partners to scale down their polling fleets substantially, and lets them build tools that can respond more quickly when key cost or performance metrics change in unexpected ways.

Data Lake – You can stream metrics to a Kinesis Data Firehose of your own. From there you can apply any desired data transformations, and then push the metrics into Amazon Simple Storage Service (S3) or Amazon Redshift. You then have the full array of AWS analytics tools at your disposal: S3 Select, Amazon SageMaker, Amazon EMR, Amazon Athena, Amazon Kinesis Data Analytics, and more. Our customers do this to combine billing and performance data in order to measure & improve cost optimization, resource performance, and resource utilization.

CloudWatch Metric Streams are fully managed and very easy to set up. Streams can scale to handle any volume of metrics, with delivery to the destination within two or three minutes. You can choose to send all available metrics to each stream that you create, or you can opt in to any of the available AWS (EC2, S3, and so forth) or custom namespaces.

Once a stream has been set up, metrics start to flow within a minute or two. The flow can be stopped and restarted later if necessary, which can be helpful for testing and debugging. When you set up a stream you choose between the binary Open Telemetry 0.7 format and the human-readable JSON format.

Each Metric Stream resides in a particular AWS region and delivers metrics to a single destination. If you want to deliver metrics to multiple partners, you will need to create a Metric Stream for each one. If you are creating a centralized data lake that spans multiple AWS accounts and/or regions, you will need to set up some IAM roles (see Controlling Access with Amazon Kinesis Data Firehose for more information).
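Because each stream is tied to one region and one destination, the number of streams to create is the product of the two. Here is a minimal sketch of that planning step; the region list, the destination labels, and the naming scheme are all hypothetical and chosen only for illustration:

```python
from itertools import product


def plan_streams(regions, destinations):
    """Return one (region, destination, stream_name) entry per required stream.

    The "metrics-<destination>-<region>" naming scheme is an illustrative
    assumption, not an AWS convention.
    """
    return [
        (region, destination, f"metrics-{destination}-{region}")
        for region, destination in product(regions, destinations)
    ]


# Hypothetical setup: two regions, each feeding a partner and a data lake.
plan = plan_streams(["us-east-1", "eu-west-1"], ["newrelic", "datalake"])
for region, destination, name in plan:
    print(region, destination, name)
```

Two regions and two destinations yield four streams, which is why a templated approach tends to pay off as the account and region count grows.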

Creating a Metric Stream

Let’s take a look at two ways to use a Metric Stream. First, I will use the Quick S3 setup option to send data to a Kinesis Data Firehose and from there to S3. Second, I will use a Firehose that writes to an endpoint at AWS Partner New Relic.

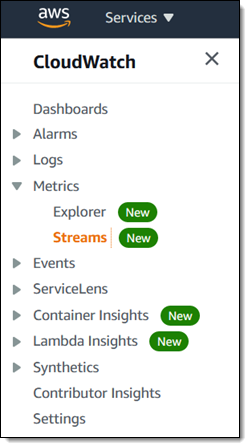

I open the CloudWatch Console, select the desired region, and click Streams in the left-side navigation. I review the page, and click Create stream to proceed:

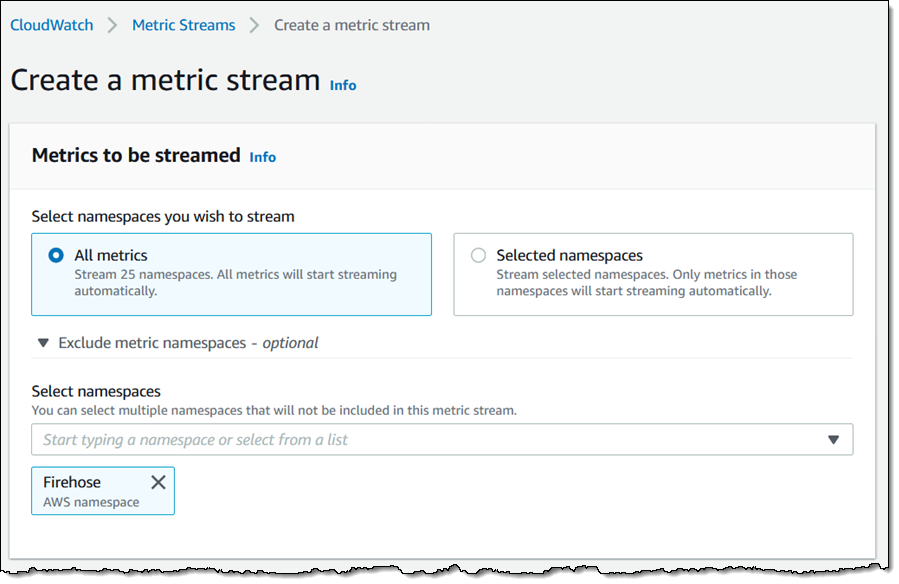

I choose the metrics to stream. I can select All metrics and then exclude those that I don’t need, or I can click Selected namespaces and include those that I need. I’ll go for All, but exclude Firehose:

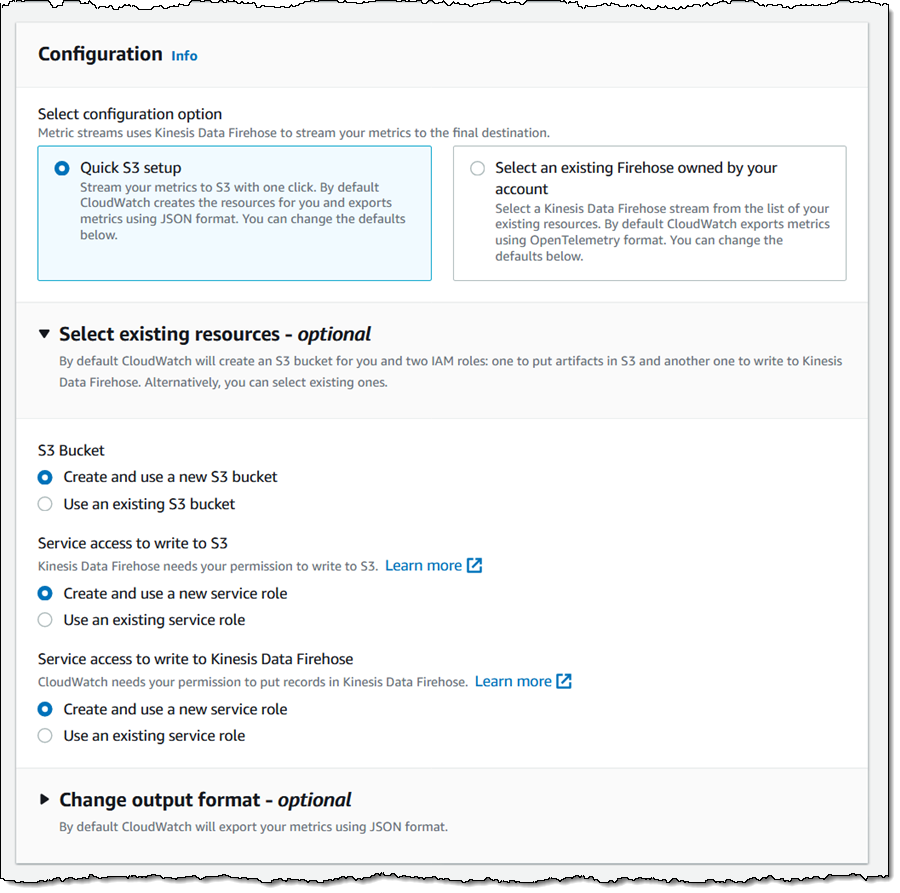

I select Quick S3 setup, and leave the other configuration settings in this section unchanged (I expanded it so that you could see all of the options that are available to you):

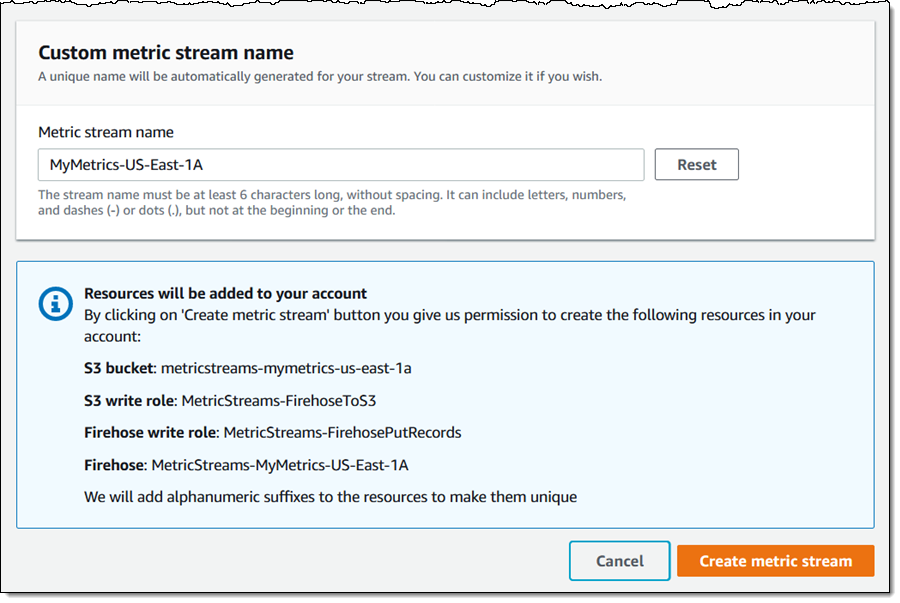

Then I enter a name (MyMetrics-US-East-1A) for my stream, confirm that I understand the resources that will be created, and click Create metric stream:

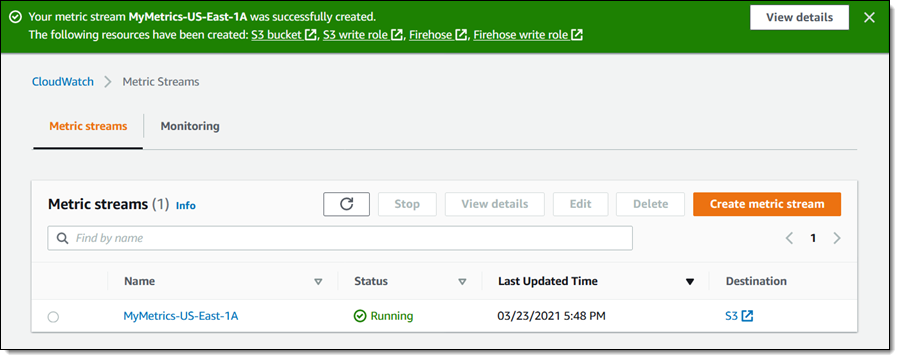

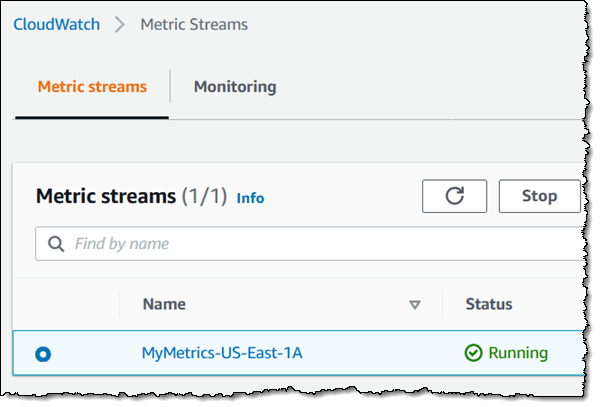
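The console choices above map fairly directly onto a request to the CloudWatch API. The sketch below only builds the parameter dictionary, so it runs without AWS credentials; I believe the result could be passed to boto3 as `boto3.client("cloudwatch").put_metric_stream(**request)`, but treat that call as an assumption to check against the current SDK documentation. The two ARNs are placeholders: Quick S3 setup creates the Firehose and the IAM roles for you in the console, whereas here you would have to supply your own.

```python
def build_metric_stream_request(name, firehose_arn, role_arn,
                                exclude_namespaces=(), include_namespaces=(),
                                output_format="json"):
    """Build keyword arguments for a metric stream creation request."""
    # A stream either includes chosen namespaces or excludes them, never both.
    if exclude_namespaces and include_namespaces:
        raise ValueError("use include filters or exclude filters, not both")
    # The two formats named in this post: JSON and Open Telemetry 0.7.
    if output_format not in ("json", "opentelemetry0.7"):
        raise ValueError(f"unsupported output format: {output_format}")

    request = {
        "Name": name,
        "FirehoseArn": firehose_arn,
        "RoleArn": role_arn,
        "OutputFormat": output_format,
    }
    if exclude_namespaces:
        request["ExcludeFilters"] = [{"Namespace": ns} for ns in exclude_namespaces]
    if include_namespaces:
        request["IncludeFilters"] = [{"Namespace": ns} for ns in include_namespaces]
    return request


# "All metrics, but exclude Firehose", as in the walkthrough above.
request = build_metric_stream_request(
    name="MyMetrics-US-East-1A",
    # Placeholder ARNs; substitute real resources from your own account.
    firehose_arn="arn:aws:firehose:us-east-1:123456789012:deliverystream/example-stream",
    role_arn="arn:aws:iam::123456789012:role/example-metric-stream-role",
    exclude_namespaces=["AWS/Firehose"],
)
print(request)
```

Leaving both filter lists empty corresponds to streaming every namespace.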

My stream is created and active within seconds:

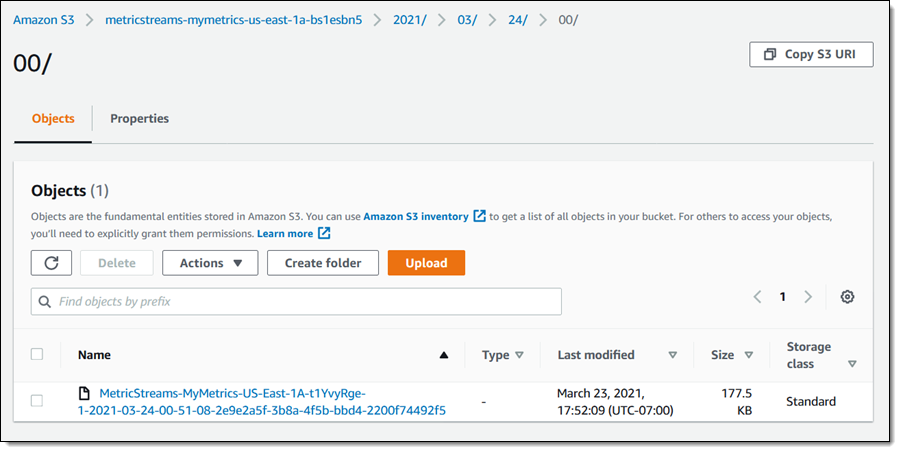

Objects begin to appear in the S3 bucket within a minute or two:

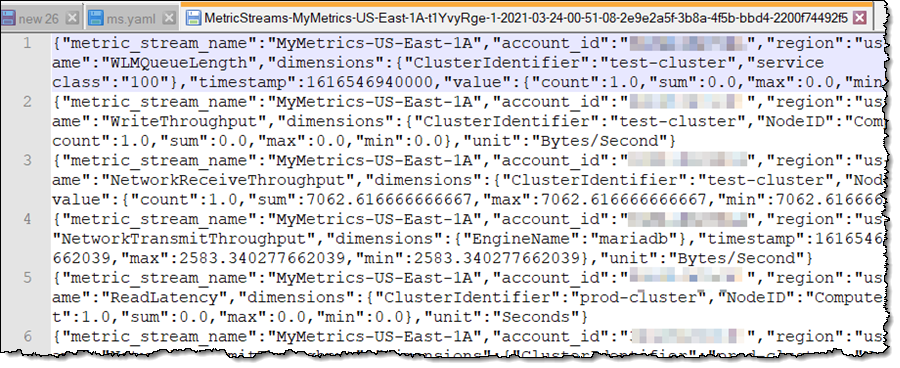

I can analyze my metrics using any of the tools that I listed above, or I can simply look at the raw data:

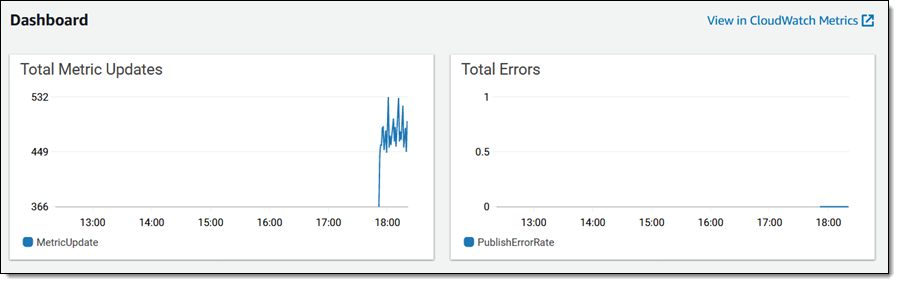
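If you pick the JSON format, the raw data can be processed with a few lines of code. The sketch below assumes each object in the bucket holds one JSON record per line, with `namespace`, `metric_name`, and a `value` object carrying `sum` and `count`; those field names reflect my understanding of the documented JSON output, and the sample records are invented for illustration.

```python
import json
from collections import defaultdict

# Invented sample records, one JSON document per line.
SAMPLE = """\
{"metric_stream_name": "MyMetrics-US-East-1A", "namespace": "AWS/EC2", "metric_name": "CPUUtilization", "timestamp": 1611929698000, "value": {"count": 1.0, "sum": 10.0, "max": 10.0, "min": 10.0}, "unit": "Percent"}
{"metric_stream_name": "MyMetrics-US-East-1A", "namespace": "AWS/EC2", "metric_name": "CPUUtilization", "timestamp": 1611929758000, "value": {"count": 1.0, "sum": 30.0, "max": 30.0, "min": 30.0}, "unit": "Percent"}
{"metric_stream_name": "MyMetrics-US-East-1A", "namespace": "AWS/EC2", "metric_name": "NetworkIn", "timestamp": 1611929698000, "value": {"count": 3.0, "sum": 1500.0, "max": 700.0, "min": 300.0}, "unit": "Bytes"}
"""


def average_by_metric(ndjson_text):
    """Return {(namespace, metric_name): average} across all records."""
    totals = defaultdict(lambda: [0.0, 0.0])  # key -> [sum, count]
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        key = (record["namespace"], record["metric_name"])
        totals[key][0] += record["value"]["sum"]
        totals[key][1] += record["value"]["count"]
    # Average = total sum / total sample count; skip metrics with no samples.
    return {key: s / c for key, (s, c) in totals.items() if c}


averages = average_by_metric(SAMPLE)
for (namespace, metric), avg in sorted(averages.items()):
    print(f"{namespace} {metric}: {avg}")
```

Dividing the summed `sum` by the summed `count` gives a sample-weighted average, which stays correct when individual records cover different numbers of data points.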

Each Metric Stream generates its own set of CloudWatch metrics:

I can stop a running stream:

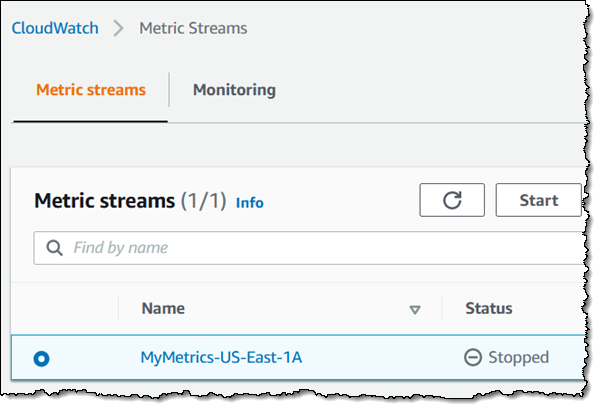

And then start it:

I can also create a Metric Stream using a CloudFormation template. Here’s an excerpt:
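The same stop and start actions can be sketched in code. The helper below accepts any object that offers `stop_metric_streams` and `start_metric_streams` methods taking a `Names` list; I believe a boto3 CloudWatch client provides both, but the example uses a small stand-in class so that it runs without AWS access. The helper name and the stand-in are hypothetical.

```python
def pause_and_resume(cloudwatch, stream_name, while_paused=None):
    """Stop a stream, optionally run a callback, then start the stream again."""
    cloudwatch.stop_metric_streams(Names=[stream_name])
    try:
        if while_paused is not None:
            while_paused()  # e.g. inspect the destination while nothing flows
    finally:
        # Restart even if the callback raises, so the stream is not left stopped.
        cloudwatch.start_metric_streams(Names=[stream_name])


class RecordingClient:
    """Stand-in for a CloudWatch client that only records the calls it receives."""

    def __init__(self):
        self.calls = []

    def stop_metric_streams(self, Names):
        self.calls.append(("stop", tuple(Names)))

    def start_metric_streams(self, Names):
        self.calls.append(("start", tuple(Names)))


client = RecordingClient()
pause_and_resume(client, "MyMetrics-US-East-1A")
print(client.calls)
```

Putting the restart in a `finally` block is a design choice that suits testing and debugging sessions, where the work done during the pause is the part most likely to fail.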

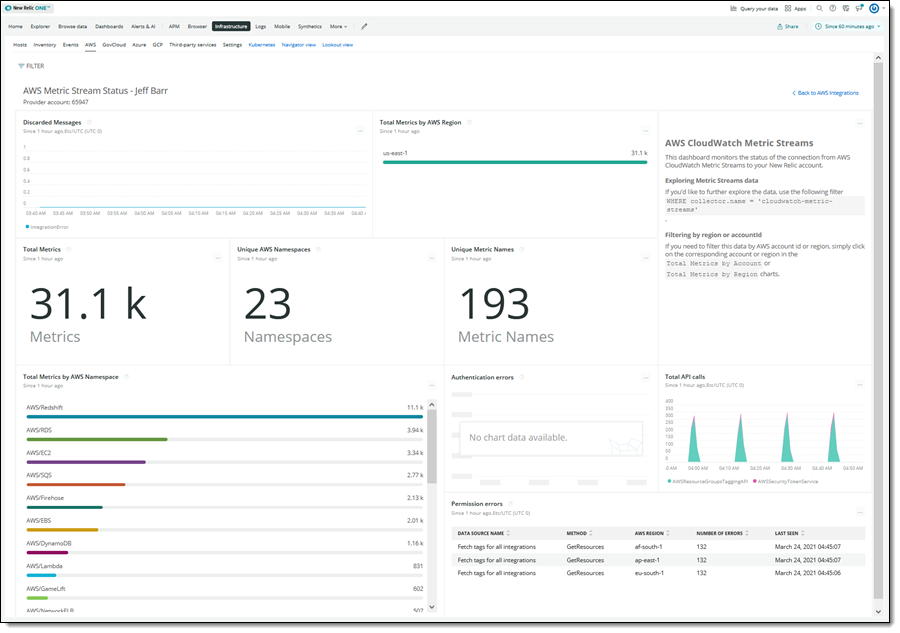
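For readers who cannot see the screenshot, here is a rough sketch of what a minimal template might contain, generated as JSON from Python. It is not the template pictured above. It assumes the `AWS::CloudWatch::MetricStream` resource type with `Name`, `FirehoseArn`, `RoleArn`, `OutputFormat`, and `ExcludeFilters` properties; the logical ID `MetricStream` and both ARNs are placeholders.

```python
import json


def metric_stream_template(name, firehose_arn, role_arn, exclude_namespaces=()):
    """Return a CloudFormation template (as a dict) that declares one metric stream."""
    properties = {
        "Name": name,
        "FirehoseArn": firehose_arn,
        "RoleArn": role_arn,
        "OutputFormat": "json",
    }
    if exclude_namespaces:
        properties["ExcludeFilters"] = [{"Namespace": ns} for ns in exclude_namespaces]
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            # "MetricStream" is an arbitrary logical ID chosen for this sketch.
            "MetricStream": {
                "Type": "AWS::CloudWatch::MetricStream",
                "Properties": properties,
            }
        },
    }


template = metric_stream_template(
    name="MyMetrics-US-East-1A",
    # Placeholder ARNs; a real template would reference or create these resources.
    firehose_arn="arn:aws:firehose:us-east-1:123456789012:deliverystream/example-stream",
    role_arn="arn:aws:iam::123456789012:role/example-metric-stream-role",
    exclude_namespaces=["AWS/Firehose"],
)
print(json.dumps(template, indent=2))
```

A complete template would normally also declare the Firehose delivery stream, the S3 bucket, and the IAM roles, which are left out here to keep the sketch short.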

Now let’s take a look at the Partner-style use case! The team at New Relic set me up with a CloudFormation template that created the necessary IAM roles and the Metric Stream. I simply entered my API key and an S3 bucket name and the template did all of the heavy lifting. Here’s what I saw:

Things to Know

And that’s about it! Here are a couple of things to keep in mind:

Regions – Metric Streams are now available in all commercial AWS Regions, excluding the AWS China (Beijing) Region and the AWS China (Ningxia) Region. As noted earlier, you will need to create a Metric Stream in each desired account and region (this is a great use case for CloudFormation StackSets).

Pricing – You pay $0.003 for every 1000 metric updates, and for any charges associated with the Kinesis Data Firehose. To learn more, check out the pricing page.

Metrics – CloudWatch Metric Streams is compatible with all CloudWatch metrics, but does not send metrics that have a timestamp that is more than two hours old. This includes S3 daily storage metrics and some of the billing metrics.
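To get a feel for the pricing noted above, here is a back-of-the-envelope estimate. It assumes that every streamed metric produces one update per minute and that a month has 30 days; both are simplifying assumptions of mine, and Kinesis Data Firehose charges are not included.

```python
PRICE_PER_1000_UPDATES = 0.003  # USD, the rate quoted in this post


def monthly_stream_cost(metric_count, updates_per_hour=60, hours=24 * 30):
    """Return (total_updates, cost_in_usd) for one month of streaming."""
    updates = metric_count * updates_per_hour * hours
    return updates, updates / 1000 * PRICE_PER_1000_UPDATES


# Hypothetical workload: 500 metrics, each updated once per minute.
updates, cost = monthly_stream_cost(500)
print(f"{updates:,} updates -> ${cost:.2f}")
# prints "21,600,000 updates -> $64.80"
```

Excluding namespaces that you do not need lowers the update count and therefore the bill.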

Partner Services

We designed this feature with the goal of making it easier & more efficient for AWS Partners including Datadog, Dynatrace, New Relic, Splunk, and Sumo Logic to get access to metrics so that the partners can build even better tools. We’ve been working with these partners to help them get started with CloudWatch Metric Streams. Here are some of the blog posts that they wrote in order to share their experiences. (I am updating this article with links as they are published.)

Now Available

CloudWatch Metric Streams is available now and you can use it to stream metrics to a Kinesis Data Firehose of your own or to one that delivers to an AWS Partner. For more information, check out the documentation and send feedback to the AWS forum for Amazon CloudWatch.

— Jeff;
