Azure powers intelligent services like Microsoft Copilot, Bing, and Azure OpenAI Service that have captured our imagination in recent days. These services, which bring generative AI to applications such as Microsoft Office 365, chatbots, and search engines, owe their magic to large language models (LLMs). While the latest LLMs are transcendental, bringing a generational change in how we apply artificial intelligence in our daily lives and reason about its evolution, we have merely scratched the surface. Creating more capable, fair, foundational LLMs that consume and present information more accurately is necessary.

How Microsoft maximizes the power of LLMs

However, creating new LLMs or improving the accuracy of existing ones is no easy feat. Creating and training improved versions of LLMs requires supercomputers with massive computational capabilities. It is paramount that both the hardware and the software in these supercomputers are used efficiently at scale, without leaving performance on the table. This is where the sheer scale of the supercomputing infrastructure in the Azure cloud shines, and why setting a new scale record in LLM training matters.

Customers need reliable and performant infrastructure to bring the most sophisticated AI use cases to market in record time. Our objective is to build state-of-the-art infrastructure that meets these demands. The latest MLPerf™ 3.1 Training results [1] are a testament to our unwavering commitment to building high-quality, high-performance systems in the cloud that achieve unparalleled efficiency in training LLMs at scale. The idea here is to use massive workloads to stress every component of the system and accelerate our build process to achieve high quality.

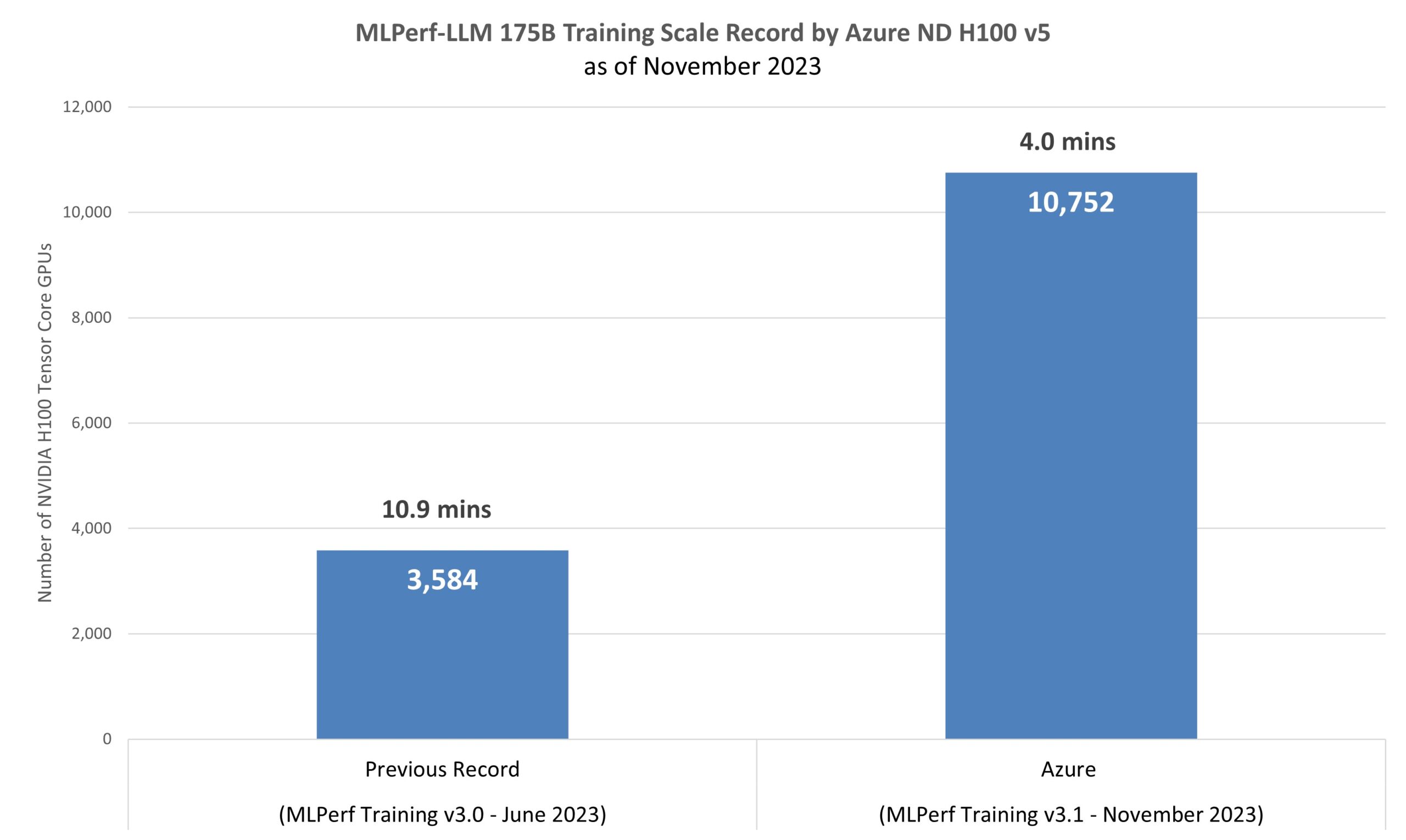

The GPT-3 LLM, with its 175 billion parameters, was trained to completion in four minutes on 1,344 ND H100 v5 virtual machines (VMs), which represent 10,752 NVIDIA H100 Tensor Core GPUs, connected by the NVIDIA Quantum-2 InfiniBand networking platform (as shown in Figure 1). This training workload uses close to real-world datasets and restarts from 2.4 terabytes of checkpoints, closely resembling a production LLM training scenario. The workload stresses the H100 GPUs’ Tensor Cores, the direct-attached Non-Volatile Memory Express (NVMe) disks, and the NVLink interconnect that provides fast communication to the high-bandwidth memory in the GPUs, as well as the cross-node 400Gb/s InfiniBand fabric.
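The figures in this paragraph can be cross-checked with a little arithmetic. The sketch below is illustrative only: it uses just the numbers quoted above, treats "four minutes" as exactly 4.0 minutes (an assumption, since the text gives a rounded value), and the variable names are made up for this example.

```python
# Figures quoted in the paragraph above.
NUM_VMS = 1_344          # ND H100 v5 virtual machines
NUM_GPUS = 10_752        # NVIDIA H100 Tensor Core GPUs
TRAIN_MINUTES = 4.0      # assumption: "four minutes" taken as exactly 4.0

# GPUs per VM follows directly from the two counts.
gpus_per_vm = NUM_GPUS // NUM_VMS
assert gpus_per_vm * NUM_VMS == NUM_GPUS, "GPU count should divide evenly across VMs"

# Aggregate GPU time consumed by the run, under the 4.0-minute assumption.
gpu_minutes = NUM_GPUS * TRAIN_MINUTES
gpu_hours = gpu_minutes / 60

print(f"GPUs per VM: {gpus_per_vm}")
print(f"Approximate GPU-hours for the run: {gpu_hours:.1f}")
```

The GPU-hours figure is only as precise as the rounded training time, so it is best read as an order-of-magnitude estimate rather than a reported result.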

“Azure’s submission, the largest in the history of MLPerf Training, demonstrates the extraordinary progress we have made in optimizing the scale of training. MLCommons’ benchmarks showcase the prowess of modern AI infrastructure and software, underlining the continuous advancements that have been achieved, ultimately propelling us toward even more powerful and efficient AI systems.” (David Kanter, Executive Director of MLCommons)

Microsoft’s commitment to performance

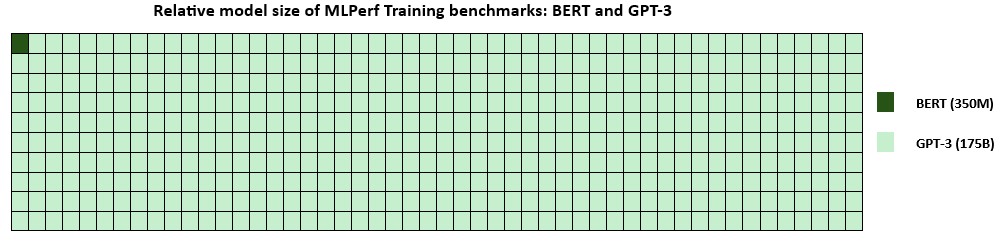

In March 2023, Microsoft introduced the ND H100 v5-series, which completed training a 350 million parameter Bidirectional Encoder Representations from Transformers (BERT) language model in 5.4 minutes, beating our existing record. This was a fourfold improvement in time to train BERT within just 18 months, highlighting our continuous endeavor to bring the best performance to our users.

Today’s results are with GPT-3, a large language model in the MLPerf Training benchmarking suite, featuring 175 billion parameters, a remarkable 500 times larger than the previously benchmarked BERT model (Figure 2). The latest training time from Azure is a 2.7x improvement over the previous record from MLPerf Training v3.0. The v3.1 submission underscores the ability to decrease training time and cost by optimizing a model that accurately represents current AI workloads.
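As a quick sanity check on these ratios, the sketch below recomputes the model-size factor and the training times implied by the stated speedups. The "implied" baselines are back-calculated from the rounded figures in the text, not reported results, and the 4.0-minute GPT-3 time is an assumption taken from the rounded "four minutes" quoted earlier.

```python
# Parameter counts quoted in the text.
GPT3_PARAMS = 175_000_000_000
BERT_PARAMS = 350_000_000

size_ratio = GPT3_PARAMS / BERT_PARAMS
print(f"GPT-3 is {size_ratio:.0f}x larger than the benchmarked BERT model")

# Speedups quoted in the text, applied to the (rounded) current times.
GPT3_MINUTES = 4.0       # assumption: rounded value from the text
GPT3_SPEEDUP = 2.7       # versus the previous MLPerf Training v3.0 record
BERT_MINUTES = 5.4
BERT_SPEEDUP = 4.0       # "four times improvement" over 18 months

# Back-calculated baselines; approximate because the inputs are rounded.
implied_prev_gpt3_minutes = GPT3_MINUTES * GPT3_SPEEDUP
implied_prev_bert_minutes = BERT_MINUTES * BERT_SPEEDUP

print(f"Implied previous GPT-3 record: ~{implied_prev_gpt3_minutes:.1f} min")
print(f"Implied earlier BERT time:     ~{implied_prev_bert_minutes:.1f} min")
```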

The power of virtualization

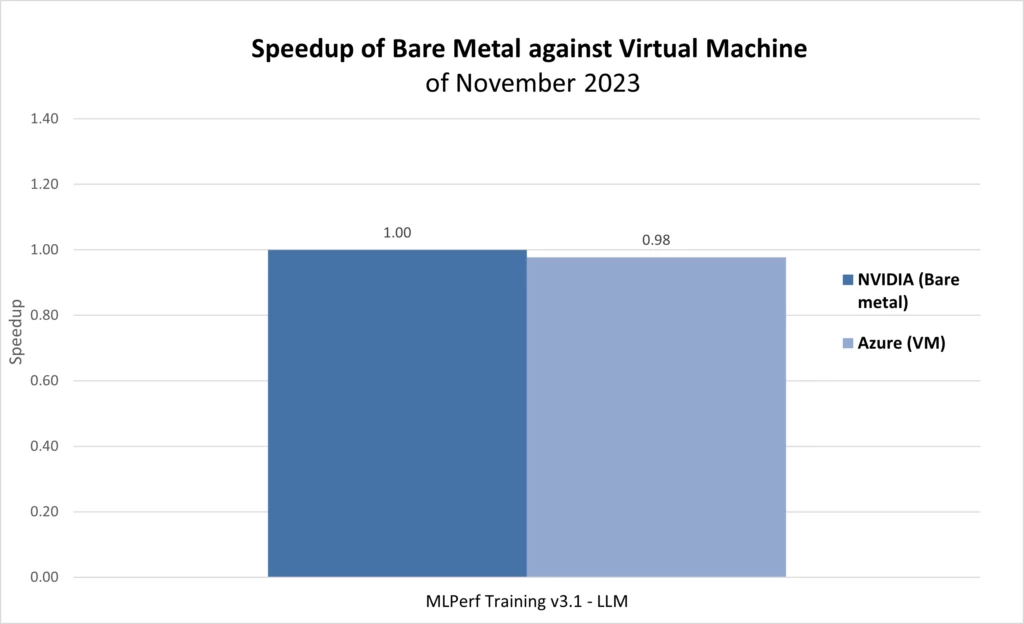

NVIDIA’s submission to the MLPerf Training v3.1 LLM benchmark on 10,752 NVIDIA H100 Tensor Core GPUs achieved a training time of 3.92 minutes. Training on Azure VMs therefore took only about 2 percent longer than the NVIDIA bare-metal submission, which represents best-in-class virtual machine performance across all offerings of HPC instances in the cloud (Figure 3).
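The 2 percent figure can be reproduced from the two training times. This is a minimal sketch: the function name `virtualization_overhead_pct` is hypothetical, and the Azure time is taken as 4.0 minutes, which is an assumption based on the rounded "four minutes" quoted earlier.

```python
def virtualization_overhead_pct(vm_minutes: float, bare_metal_minutes: float) -> float:
    """Return how much longer the VM run took than bare metal, in percent."""
    if bare_metal_minutes <= 0:
        raise ValueError("bare_metal_minutes must be positive")
    return (vm_minutes - bare_metal_minutes) / bare_metal_minutes * 100.0


AZURE_VM_MINUTES = 4.0      # assumption: rounded value from the text
BARE_METAL_MINUTES = 3.92   # NVIDIA bare-metal submission, as quoted

overhead = virtualization_overhead_pct(AZURE_VM_MINUTES, BARE_METAL_MINUTES)
print(f"Virtualization overhead: {overhead:.1f}%")
```

The same helper applies to any pair of VM and bare-metal timings measured on the same GPU count, which is what makes the comparison meaningful here.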

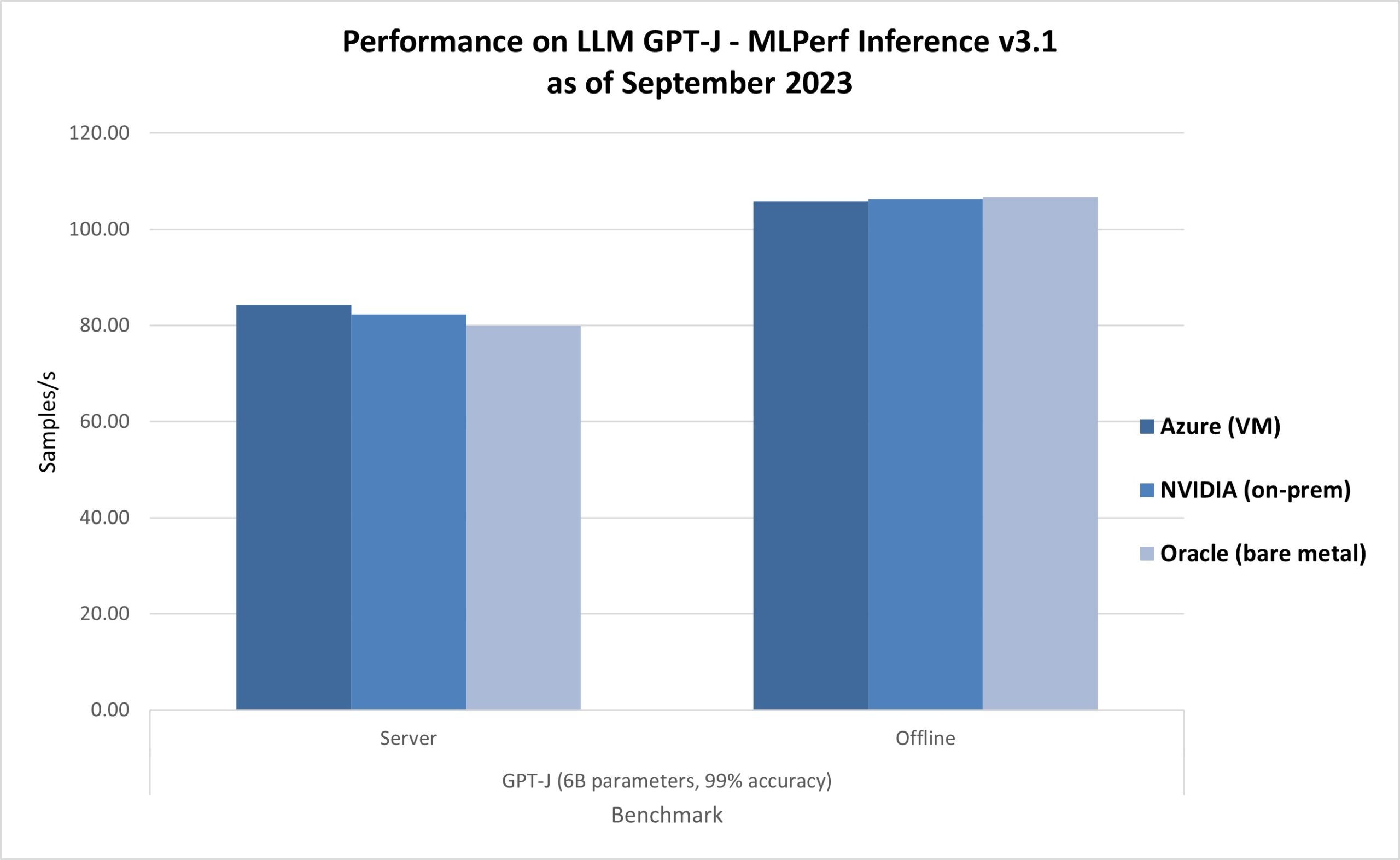

The latest AI inferencing results on Azure ND H100 v5 VMs show leadership as well, as demonstrated in MLPerf Inference v3.1. The ND H100 v5-series delivered 0.99x-1.05x relative performance compared to the bare-metal submissions on the same NVIDIA H100 Tensor Core GPUs (Figure 4), echoing the efficiency of virtual machines.

In conclusion, built for performance, scalability, and adaptability, the Azure ND H100 v5-series offers exceptional throughput and minimal latency for both training and inferencing tasks in the cloud, and provides the highest quality infrastructure for AI.

Learn more about Azure AI Infrastructure

References

- MLCommons® is an open engineering consortium of AI leaders from academia, research labs, and industry. They build fair and useful benchmarks that provide unbiased evaluations of training and inference performance for hardware, software, and services, all conducted under prescribed conditions. MLPerf™ Training benchmarks consist of real-world compute-intensive AI workloads to best simulate customers’ needs. Tests are transparent and objective, so technology decision-makers can rely on the results to make informed buying decisions.
